In the world of High-Frequency Trading, automated applications process hundreds of millions of market signals every day and send back thousands of orders on various exchanges around the globe.

In order to remain competitive, the reaction time must consistently remain in microseconds, especially during unusual peaks such as a “black swan” event.

In a typical architecture, financial exchange signals will be converted into a single internal market data format (exchanges use various protocols such as TCP/IP, UDP Multicast and multiple formats such as binary, SBE, JSON, FIX, etc.).

Those normalised messages are then sent to algorithmic servers, statistics engines, user interfaces, log servers, and databases of all kinds (in-memory, physical, distributed).

Any latency along that path can have expensive consequences such as a strategy making decisions based on an old price or an order reaching the market too late.

To gain those few crucial microseconds, most players invest in expensive hardware: pools of servers with overclocked liquid-cooled CPUs (in 2020 you can buy a server with 56 cores at 5.6 GHz and 1 TB RAM), colocation in major exchange datacentres, high-end nanosecond network switches, dedicated sub-oceanic lines (Hibernian Express is a major provider), even microwave networks.

It’s common to see highly customised Linux kernels with OS bypass so that the data “jumps” directly from the network card to the application, IPC (Interprocess communication) and even FPGAs (programmable single-purpose chips).

As for programming languages, C++ appears to be a natural contender for the server-side application: it’s fast, as close to the machine code as it gets and, once compiled for the target platform, offers a constant processing time.

We made a different choice.

For the past 14 years, we’ve competed in the FX algorithmic trading space coding in Java and using great but affordable hardware.

With a small team, limited resources, and a job market scarce in skilled developers, Java meant we could quickly add software improvements as the Java ecosystem has quicker time-to-market than C derivatives. An improvement can be discussed in the morning, and be implemented, tested and released in production in the afternoon.

Compared to big corporations that need weeks or even months for the slightest software update, this is a key advantage. And in a field where one bug can erase a whole year’s profit in seconds, we were not ready to compromise on quality. We implemented a rigorous Agile environment, including Jenkins, Maven, unit tests, nightly builds and Jira, using many open source libraries and projects.

With Java, developers can focus on intuitive object-oriented business logic rather than debugging obscure core dumps or managing pointers as in C++. And, thanks to Java’s robust internal memory management, junior programmers can also add value from day one with limited risk.

With good design patterns and clean coding habits, it is possible to reach C++ latencies with Java.

For example, Java will optimise and compile the best path as observed during the application run, but C++ compiles everything beforehand, so even unused methods will still be part of the final executable binary.

However, there is one issue, and a major one to boot. What makes Java such a powerful and enjoyable language is also its downfall (at least for microsecond-sensitive applications), namely the Java Virtual Machine (JVM):

- Java compiles the code as it goes (Just in Time compiler or JIT), which means that the first time it encounters some code, it incurs a compilation delay.

- The way Java manages the memory is by allocating chunks of memory in its “heap” space. Every so often, it will clean up that space and remove old objects to make room for new ones. The main issue is that to make an accurate count, application threads need to be momentarily “frozen”. This process is known as Garbage Collection (GC).

The GC is the main reason low-latency application developers may dismiss Java a priori.

There are a few Java Virtual Machines available on the market.

The most common and standard one is the Oracle Hotspot JVM, which is widely used in the Java community, mostly for historical reasons.

For very demanding applications, there is a great alternative called Zing, by Azul Systems.

Zing is a powerful replacement for the standard Oracle Hotspot JVM. Zing addresses both the GC pause and JIT compilation issues.

Let’s examine some of the issues inherent to using Java and possible solutions.

Understanding Java’s Just-In-Time Compiler

Languages like C++ are called compiled languages because the delivered code is entirely in binary and executable directly on the CPU.

PHP or Perl are called interpreted because the interpreter (installed on the destination machine) compiles each line of code as it goes.

Java is somewhere in between: it compiles the code into what is called Java bytecode, which in turn can be compiled into binary when the JVM deems it appropriate to do so.

The reason Java does not compile the code at start-up has to do with long-term performance optimisation. By observing the application run and analysing real-time method invocations and class initialisations, Java compiles frequently called portions of code. It might even make some assumptions based on experience (this portion of code never gets called or this object is always a String).

The actual compiled code is therefore very fast. But there are three downsides:

- A method needs to be called a certain number of times to reach the compilation threshold before it can be optimised and compiled (the limit is configurable but typically around 10,000 calls). Until then, unoptimised code is not running at “full speed”. There is a compromise between getting quicker compilation and getting high-quality compilation (if the assumptions were wrong there will be a cost of recompilation).

- When the Java application restarts, we are back to square one and must wait to reach that threshold again.

- Some applications (like ours) have some infrequent but critical methods that will only be invoked a handful of times but need to be extremely fast when they are (think of a risk or stop-loss process only called in emergencies).
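A common workaround on a standard JVM is to exercise such critical methods with synthetic data at start-up, so that they cross the compilation threshold before they are needed for real. The following is a minimal sketch of that idea, not the code used in our application: `StopLossCheck`, its `breached` method and the `WARM_UP_CALLS` value are hypothetical, and the real thresholds depend on the JVM and its settings.

```java
import java.util.concurrent.ThreadLocalRandom;

/** Hypothetical stop-loss check: rarely called, but must be fast when it is. */
final class StopLossCheck {
    private final double maxLoss;

    StopLossCheck(double maxLoss) {
        this.maxLoss = maxLoss;
    }

    /** Returns true when the unrealised loss on a long position exceeds the limit. */
    boolean breached(double entryPrice, double currentPrice, long quantity) {
        double loss = (entryPrice - currentPrice) * quantity;
        return loss > maxLoss;
    }
}

public class WarmUpExample {
    // Assumption: comfortably above the roughly 10,000 calls mentioned above.
    static final int WARM_UP_CALLS = 20_000;

    /** Calls the critical method repeatedly with synthetic prices; returns the breach count. */
    static int warmUp(StopLossCheck check) {
        int breaches = 0;
        ThreadLocalRandom rnd = ThreadLocalRandom.current();
        for (int i = 0; i < WARM_UP_CALLS; i++) {
            // Prices in [1.0, 2.0) so that both outcomes of the check are exercised.
            double price = 1.0 + rnd.nextDouble();
            if (check.breached(1.5, price, 1_000)) {
                breaches++;
            }
        }
        return breaches; // the result is used so the loop is not trivially removable
    }

    public static void main(String[] args) {
        StopLossCheck check = new StopLossCheck(100.0);
        warmUp(check);
        // The "real" emergency call now runs on code the JIT has had a chance to compile.
        System.out.println(check.breached(1.5, 1.3, 1_000));
    }
}
```

The weakness of this approach is the one described in the list above: the JIT optimises for what it observed, so unrepresentative warm-up data can lead to assumptions that are later invalidated and recompiled, and the whole exercise has to be repeated after every restart.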

Azul Zing addresses those issues by having its JVM “save” the state of compiled methods and classes in what it calls a profile. This unique feature named ReadyNow® means Java applications are always running at optimum speed, even after a restart.

When you restart your application with an existing profile, the Azul JVM immediately recalls its previous decisions and compiles the outlined methods directly, solving the Java warm-up issue.

Furthermore, you can build a profile in a development environment to mimic production behaviour. The optimised profile can then be deployed in production, knowing that all critical paths are compiled and optimised.

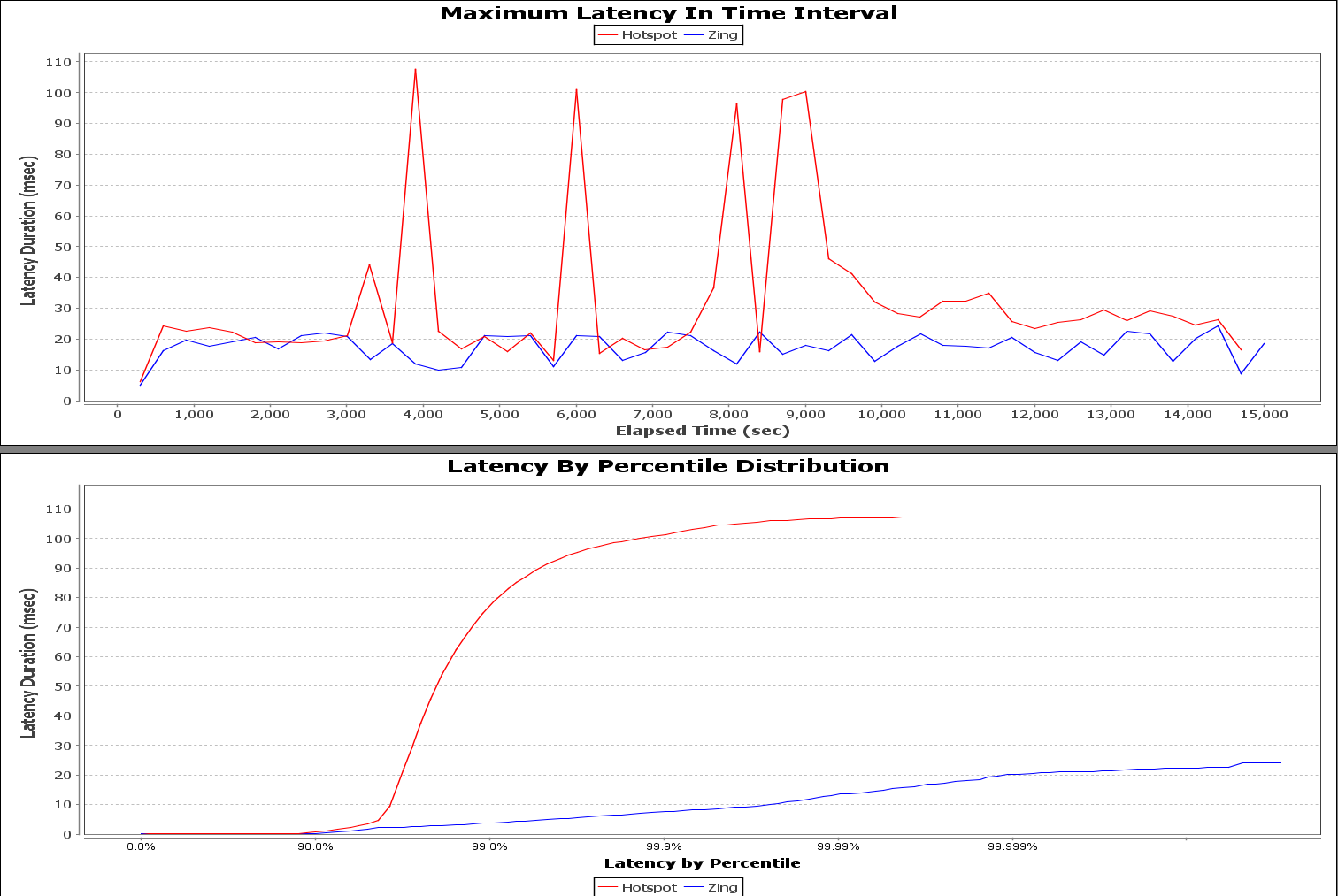

The graphs show the maximum latency of a trading application (in a simulated environment).

The large latency peaks of the Hotspot JVM are clearly visible while Zing’s latency remains fairly constant over time.

The percentile distribution indicates that 1% of the time, Hotspot JVM incurs latencies 16 times worse than Zing JVM.

Addressing Garbage Collection (GC) pauses

The second issue is that, during a garbage collection, the whole application could freeze for anywhere from a few milliseconds to a few seconds (the delay increases with code complexity and heap size) and, to make matters worse, you have no way of controlling when this happens.

While pausing an application for a few milliseconds or even seconds may be acceptable for many Java applications, it is a disaster for low-latency ones, whether in automotive, aerospace, medical, or finance sectors.

The GC impact is a big topic among Java developers; a full garbage collection is commonly referred to as a “stop-the-world pause” because it freezes the entire application.

Over the years, many GC algorithms have attempted to balance throughput (how much CPU is spent on the actual application logic rather than on garbage collection) against GC pauses (how long can I afford to pause my application for?).

Since Java 9, the G1 collector has been the default GC, the main idea being to slice up GC pauses according to user-supplied time targets. It usually offers shorter pause times but at the expense of lower throughput. In addition, the pause time increases with the size of the heap.

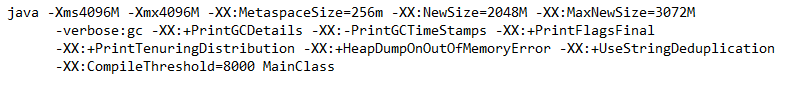

Java offers plenty of settings to tune its garbage collection (and the JVM in general), from the heap size to the collection algorithm and the number of threads allocated to GC. So, it’s quite common to see Java applications configured with a plethora of customised options.
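The options a given JVM was actually started with, and how much work its collectors have done so far, can be inspected from inside the application through the standard `java.lang.management` API. Here is a minimal sketch; the class name `GcSettingsReport` and the output format are hypothetical.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.List;

public class GcSettingsReport {

    /** One line per collector registered in this JVM, with its running totals. */
    static String describeCollectors() {
        StringBuilder sb = new StringBuilder();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            sb.append(gc.getName())
              .append(": collections=").append(gc.getCollectionCount())
              .append(", totalTimeMs=").append(gc.getCollectionTime())
              .append(System.lineSeparator());
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Options passed to the JVM itself (not the program arguments).
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        System.out.println("JVM options: " + jvmArgs);
        System.out.println("Max heap (bytes): " + Runtime.getRuntime().maxMemory());
        System.out.print(describeCollectors());
    }
}
```

Launched with, say, `java -XX:+UseG1GC -XX:MaxGCPauseMillis=10 -Xmx4g GcSettingsReport` (illustrative HotSpot flags, not the configuration of any system described here), the first line echoes those options back. Note that `getCollectionTime()` is an approximate accumulated figure and, for concurrent collectors, may not equal the time the application was actually paused.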

Many developers (including ours) have turned to various techniques to avoid GC altogether. Mainly, if we create fewer objects, there will be fewer objects to clear later.

One old (and still used) technique is to use object pools of re-usable objects. A database connection pool, for example, will hold a reference to 10 opened connections ready to use as required.
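The same idea applies to short-lived message objects. The following is a minimal single-threaded sketch, assuming a hypothetical mutable `Quote` class; `ObjectPool` is an illustrative name rather than a library class, and a production pool would also need a thread-safety and exhaustion policy.

```java
import java.util.ArrayDeque;
import java.util.function.Supplier;

/** Minimal object pool. Not thread-safe: intended for use from a single thread. */
final class ObjectPool<T> {
    private final ArrayDeque<T> free;
    private final Supplier<T> factory;

    ObjectPool(int size, Supplier<T> factory) {
        this.factory = factory;
        this.free = new ArrayDeque<>(size);
        // Pre-allocate everything up front, outside the latency-critical path.
        for (int i = 0; i < size; i++) {
            free.push(factory.get());
        }
    }

    /** Hands out a pooled instance, or allocates a new one if the pool is exhausted. */
    T acquire() {
        T obj = free.poll();
        return obj != null ? obj : factory.get();
    }

    /** Returns an instance to the pool so it can be reused instead of collected. */
    void release(T obj) {
        free.push(obj);
    }

    int available() {
        return free.size();
    }
}

/** Hypothetical mutable message, overwritten in place on every reuse. */
final class Quote {
    String symbol;
    double bid;
    double ask;

    Quote set(String symbol, double bid, double ask) {
        this.symbol = symbol;
        this.bid = bid;
        this.ask = ask;
        return this;
    }
}

public class ObjectPoolExample {
    public static void main(String[] args) {
        ObjectPool<Quote> pool = new ObjectPool<>(4, Quote::new);
        Quote q = pool.acquire().set("EURUSD", 1.1012, 1.1013);
        System.out.println(q.symbol + " " + q.bid + "/" + q.ask);
        pool.release(q); // the same instance will be handed out again
        System.out.println("available: " + pool.available());
    }
}
```

The trade-off is that pooled objects are mutable and long-lived, so a caller that keeps using an instance after releasing it will silently read someone else's data.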

Multi-threading often requires locks, which cause synchronisation latencies and pauses (especially if threads share resources). A popular design is a ring buffer queue system with many threads writing and reading in a lock-free setup (see the LMAX Disruptor).
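To give a feel for the lock-free ring buffer idea, here is a deliberately simplified sketch restricted to one producer thread and one consumer thread. It is not the Disruptor and not our production code; `SpscRingBuffer` and `RingBufferExample` are hypothetical names, and boxed `Long` values are used only for brevity.

```java
import java.util.concurrent.atomic.AtomicLong;

/** Single-producer / single-consumer ring buffer with no locks. */
final class SpscRingBuffer<T> {
    private final Object[] slots;
    private final int mask;
    private final AtomicLong head = new AtomicLong(); // next index to read; written by the consumer only
    private final AtomicLong tail = new AtomicLong(); // next index to write; written by the producer only

    SpscRingBuffer(int capacity) {
        if (capacity <= 0 || Integer.bitCount(capacity) != 1) {
            throw new IllegalArgumentException("capacity must be a positive power of two");
        }
        slots = new Object[capacity];
        mask = capacity - 1;
    }

    /** Producer thread only. Returns false when the buffer is full. */
    boolean offer(T value) {
        long t = tail.get();
        if (t - head.get() == slots.length) {
            return false;
        }
        slots[(int) (t & mask)] = value;
        tail.set(t + 1); // volatile write publishes the slot to the consumer
        return true;
    }

    /** Consumer thread only. Returns null when the buffer is empty. */
    @SuppressWarnings("unchecked")
    T poll() {
        long h = head.get();
        if (h == tail.get()) {
            return null;
        }
        int idx = (int) (h & mask);
        T value = (T) slots[idx];
        slots[idx] = null;
        head.set(h + 1); // volatile write frees the slot for the producer
        return value;
    }
}

public class RingBufferExample {
    /** Sends 1..count through the ring from a producer thread and sums them on the caller. */
    static long transfer(int count) throws InterruptedException {
        SpscRingBuffer<Long> ring = new SpscRingBuffer<>(1024);
        Thread producer = new Thread(() -> {
            for (long i = 1; i <= count; i++) {
                while (!ring.offer(i)) {
                    Thread.onSpinWait(); // busy-spin instead of blocking on a lock
                }
            }
        });
        producer.start();
        long sum = 0;
        int received = 0;
        while (received < count) {
            Long v = ring.poll();
            if (v == null) {
                Thread.onSpinWait();
                continue;
            }
            sum += v;
            received++;
        }
        producer.join();
        return sum;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(transfer(100_000)); // prints 5000050000
    }
}
```

Each index has exactly one writer, which is what removes the need for locks or compare-and-swap loops here. Supporting several producers or consumers requires additional coordination, and the Disruptor goes further by pre-allocating the entries so that passing messages creates no garbage.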

Out of frustration, some experts have even chosen to bypass Java’s memory management altogether and manage the memory allocation themselves, which, while solving one problem, creates more complexity and risk.
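One standard way to do this is to keep data in direct (off-heap) buffers, so that the contents do not live on the garbage-collected heap. The sketch below uses only `java.nio.ByteBuffer`; the `OffHeapQuotes` class and its 16-byte slot layout are hypothetical and chosen purely for illustration.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Fixed-size off-heap store of (bid, ask) pairs, 16 bytes per slot. */
final class OffHeapQuotes {
    private static final int SLOT_BYTES = 2 * Double.BYTES;
    private final ByteBuffer buffer;
    private final int capacity;

    OffHeapQuotes(int capacity) {
        this.capacity = capacity;
        // allocateDirect reserves native memory; only the small wrapper object is on the heap.
        this.buffer = ByteBuffer.allocateDirect(capacity * SLOT_BYTES)
                                .order(ByteOrder.nativeOrder());
    }

    /** Overwrites a slot in place: no object is created per update. */
    void put(int slot, double bid, double ask) {
        int offset = offsetOf(slot);
        buffer.putDouble(offset, bid);
        buffer.putDouble(offset + Double.BYTES, ask);
    }

    double bid(int slot) {
        return buffer.getDouble(offsetOf(slot));
    }

    double ask(int slot) {
        return buffer.getDouble(offsetOf(slot) + Double.BYTES);
    }

    // The layout is entirely our responsibility: a wrong offset silently corrupts data.
    private int offsetOf(int slot) {
        if (slot < 0 || slot >= capacity) {
            throw new IndexOutOfBoundsException("slot " + slot);
        }
        return slot * SLOT_BYTES;
    }
}

public class OffHeapExample {
    public static void main(String[] args) {
        OffHeapQuotes quotes = new OffHeapQuotes(1024);
        quotes.put(3, 1.1012, 1.1013);
        System.out.println(quotes.bid(3) + "/" + quotes.ask(3));
    }
}
```

Even in this small example the added complexity is visible: the code has to define its own binary layout, bounds checks and lifecycle, which is exactly the kind of work Java developers normally leave to the JVM.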

In this context, it became evident that we should consider other JVMs, and we decided to try out Azul Zing JVM.

Quickly, we were able to achieve very high throughputs with negligible pauses.

This is because Zing uses a unique collector called C4 (Continuously Concurrent Compacting Collector) that allows pauseless garbage collection regardless of the Java heap size (up to 8 Terabytes).

This is achieved by concurrently mapping and compacting the memory while the application is still running.

Moreover, it does not require any code change and both latency and speed improvements are visible out of the box without the need for a lengthy configuration.

In this context, Java programmers can enjoy the best of both worlds: the simplicity of Java (no need to be paranoid about creating new objects) and the underlying performance of Zing, allowing highly predictable latencies across the system.

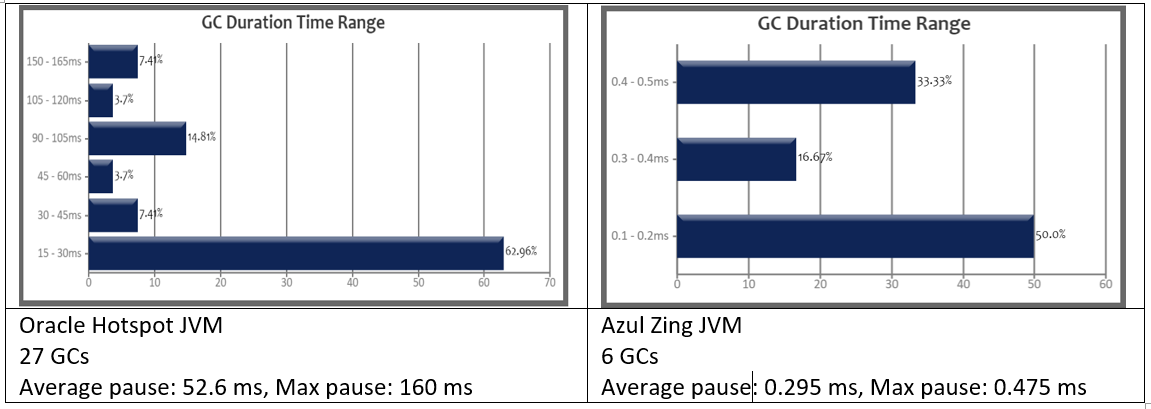

Thanks to GCeasy, a universal GC log analyser, we can quickly compare both JVMs in a real automated trading application (in a simulated environment).

In our application, GC times are about 180 times shorter with Zing than with the standard Oracle Hotspot JVM.

Even more impressive is that, while GC pauses usually correspond to actual application pause times, Zing’s smart GC usually happens in parallel with minimal or no actual pause.

In conclusion, it is still possible to achieve high performance and low latency while enjoying the simplicity and business-oriented nature of Java. While C++ is used for specific low-level components such as drivers, databases, compilers, and operating systems, most real-life applications can be written in Java, even the most demanding ones.

This is why, according to Oracle, Java is the #1 programming language, with millions of developers and more than 51 billion Java Virtual Machines worldwide.

Used with permission and thanks. Originally written by Jad Sarmo, Head of Technology at Dsquare Trading Ltd, and published on Medium.