In many ways, the Java Virtual Machine (JVM) is like the main character in the movie Memento. The hero of the film has no short-term memory. Every ten minutes or so, he wakes up with no idea what he was doing before or what is going on around him, and he must piece it together from his surroundings and the clues he has tattooed on his body.

Every JVM run is much the same, especially when it comes to JIT compilation and warmup. The JVM has very little idea what it did on its last run, and no idea what the other JVMs around it are doing. It must figure out how to optimize the code it is running from the only clues it has: it runs the application in slow interpreted mode while building a profile of the hot methods and VM state.

So a JVM wakes up and says, “What am I doing now? I guess I’m running this thing called Kafka. Never heard of it! I’d better run it in interpreted mode and start figuring out which methods are important here.” It does this even though the JVM and its containerized brethren have run Kafka a hundred times before.

Why care about this? Well…

- Slow warmup – Your JVM is slow to get up to speed, which means a worse experience for its end users. Whether you’re scaling out instances of a game engine to meet a spike in players or scaling out shopping cart instances during a big sale, users who land on JVMs that haven’t warmed up yet get a worse experience and are more likely to leave.

- CPU spikes – The JVM spends longer periods at high CPU usage as it juggles JIT optimization and your application logic. This can play havoc with your load balancers and auto-scalers.

- Latency – Because traditional JIT optimizes for what it sees right now instead of what it knows it is likely to see over the whole life of the JVM, you are more likely to get deoptimization storms that can lead to latency outliers.

- Operational headaches – Engineering teams have to warm up JVMs manually with “fake” transactions and other tricks. This makes them less willing to take full advantage of cloud elasticity by scaling up resources exactly when they are needed.

- Cost – At the end of the day, the answer to all of the problems above is to overprovision resources and scale less elastically to compensate for Java’s slow warmup curve, and that costs money.

“Java’s warmup problem has long been an issue in ensuring peak application performance,” said William Fellows, Research Director at 451 Research. “Organizations should consider ways to reduce operational friction by automating the selection of the best optimization patterns for container-based applications while also improving elasticity to control cloud costs.”(1)

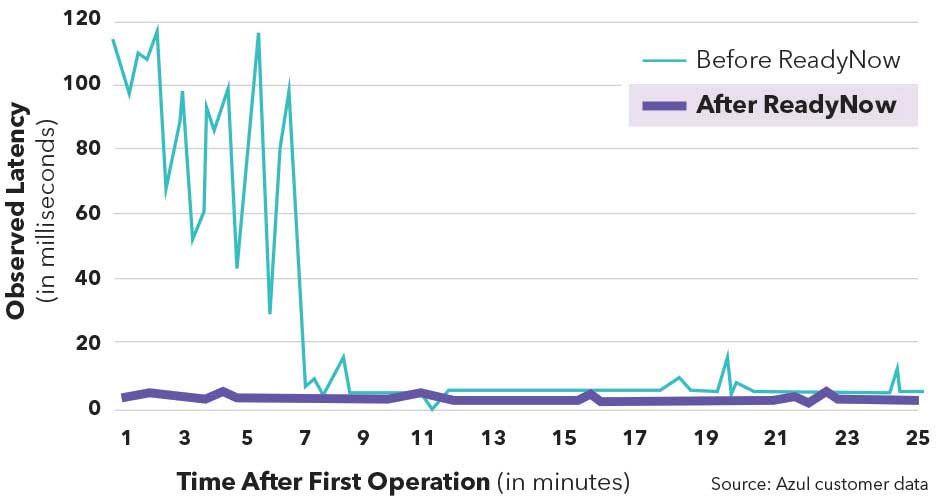

Azul Platform Prime, Azul’s optimized builds of OpenJDK, includes a technology called ReadyNow, which records all the necessary profiling information from a JVM’s run so that, on the next run, the JVM has everything it needs to skip the profiling stage and start compiling immediately. Whenever possible, the JVM compiles methods before the main method is executed. It compiles everything else as soon as the application first “touches” the method and the JVM has the state information it needs to perform the compilation.
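To make the difference concrete, here is a toy Java model of the interpret-profile-compile cycle and of what a saved profile changes. It is a minimal sketch under simplifying assumptions, not how ReadyNow is implemented: the `ToyJvm` class, the invocation-count `COMPILE_THRESHOLD`, and the idea that a "profile" is just the set of methods that ended up compiled are all hypothetical. A real ReadyNow profile carries much richer profiling and speculation state, and the real JVM defers some compilations until a method is first touched instead of compiling everything up front.

```java
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

// Toy model of profile-guided warmup; illustrative only, not Azul's implementation.
public class WarmupModel {
    // Hypothetical number of interpreted calls before a method gets JIT-compiled.
    static final int COMPILE_THRESHOLD = 3;

    static final class ToyJvm {
        final Map<String, Integer> counts = new HashMap<>();
        final Set<String> compiled = new LinkedHashSet<>();
        int interpretedCalls = 0;

        ToyJvm(Set<String> savedProfile) {
            // With a saved profile, "compile" the known-hot methods before main runs.
            if (savedProfile != null) {
                compiled.addAll(savedProfile);
            }
        }

        void invoke(String method) {
            if (compiled.contains(method)) {
                return; // fast path: compiled code
            }
            interpretedCalls++; // slow path: interpreter, while profiling
            int n = counts.merge(method, 1, Integer::sum);
            if (n >= COMPILE_THRESHOLD) {
                compiled.add(method); // method became hot, so compile it
            }
        }

        // The "profile" this toy JVM leaves behind for the next run.
        Set<String> recordProfile() {
            return new LinkedHashSet<>(compiled);
        }
    }

    static ToyJvm run(Set<String> profile, String[] workload) {
        ToyJvm jvm = new ToyJvm(profile);
        for (String m : workload) {
            jvm.invoke(m);
        }
        return jvm;
    }

    public static void main(String[] args) {
        String[] workload = new String[20];
        for (int i = 0; i < workload.length; i++) {
            workload[i] = (i % 2 == 0) ? "poll" : "append";
        }
        ToyJvm first = run(null, workload);                    // cold start
        ToyJvm second = run(first.recordProfile(), workload);  // start from saved profile
        System.out.println("first run interpreted calls:  " + first.interpretedCalls);
        System.out.println("second run interpreted calls: " + second.interpretedCalls);
    }
}
```

Running `main` reports 6 interpreted calls for the cold run and 0 for the run that starts from the recorded profile: the second JVM never passes through the slow interpreted phase for methods it already knows are hot.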

At Azul, we have been building the notion of cloud-native JVMs. A cloud-native JVM is one that doesn’t just live in isolation, but instead can:

- Collaborate with other JVMs in the cloud, learning from the experience of JVMs that have run the same code

- Take advantage of cloud-native services by offloading work that used to be done locally to dedicated services that can do it better

- Take advantage of the elasticity of the cloud by bringing more resources to bear when they are needed and scaling them down to near zero when they are not, thereby delivering higher value and cost efficiency than when handling all of these tasks with only local resources

Our first step toward a cloud-native JVM was Cloud Native Compiler (CNC), which offloads JIT compilation to dedicated hardware and can draw on a cache of previous compilations to save time and resources when warming up your JVM.
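The idea behind a shared compilation cache can be sketched the same way. In this hypothetical model, a `CompileService` stands in for the remote compiler, and each compilation is cached under a key made of the method name plus a fingerprint of the profile it was optimized for. The key shape and all class names are assumptions for illustration, not Cloud Native Compiler's actual design.

```java
import java.util.HashMap;
import java.util.Map;

// Toy model of offloaded JIT compilation with a shared cache; illustrative only.
public class CompileCacheModel {
    // Hypothetical cache key: the method plus a fingerprint of the profile it was optimized for.
    record CompileRequest(String method, String profileFingerprint) {}

    static final class CompileService {
        private final Map<CompileRequest, String> cache = new HashMap<>();
        int compilations = 0;
        int cacheHits = 0;

        String compile(CompileRequest req) {
            String cached = cache.get(req);
            if (cached != null) {
                cacheHits++; // another JVM already paid for this compilation
                return cached;
            }
            compilations++; // expensive work, done once on the service's hardware
            String code = "machine-code[" + req.method() + "@" + req.profileFingerprint() + "]";
            cache.put(req, code);
            return code;
        }
    }

    public static void main(String[] args) {
        CompileService service = new CompileService();
        // Three JVMs running the same code ask for the same optimized methods.
        for (int jvm = 0; jvm < 3; jvm++) {
            service.compile(new CompileRequest("OrderService.checkout", "p1"));
            service.compile(new CompileRequest("Cart.total", "p1"));
        }
        System.out.println("compilations=" + service.compilations + " cacheHits=" + service.cacheHits);
    }
}
```

With three JVMs requesting the same two methods, the service compiles each method once and answers the remaining four requests from the cache, printing `compilations=2 cacheHits=4`. The key includes the profile as well as the method because optimized code is only valid under the assumptions it was compiled with.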

Today we are announcing the next step in services that support cloud-native JVMs: ReadyNow Orchestrator (RNO). First, we removed much of the operational friction of working with ReadyNow. Previously, you had to save a ReadyNow profile manually and change your deployment process just to make that saved profile available to JVMs at startup. Now, ReadyNow Orchestrator reads and writes ReadyNow profiles directly. You only have to provide a few command-line parameters, and RNO automatically records ReadyNow profiles from multiple JVMs and picks the best one to serve when a new JVM asks for one.
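Collecting profiles from many JVMs and serving one to each new JVM can be modeled as a small registry. The selection rule below (prefer the profile with the most compiled methods, then the one from the longest-running JVM) is purely an assumption for illustration: the announcement does not say how ReadyNow Orchestrator decides which profile is best, and `ProfileCandidate` and `Orchestrator` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

// Toy model of a profile orchestrator; the selection rule is a hypothetical stand-in.
public class ProfileOrchestratorModel {
    // Hypothetical metadata stored alongside each uploaded profile.
    record ProfileCandidate(String jvmId, long uptimeSeconds, int compiledMethods) {}

    static final class Orchestrator {
        private final List<ProfileCandidate> candidates = new ArrayList<>();

        // Running JVMs upload the profiles they recorded.
        void upload(ProfileCandidate p) {
            candidates.add(p);
        }

        // Assumed rule: most compiled methods wins; ties go to the longer-running JVM.
        Optional<ProfileCandidate> best() {
            return candidates.stream()
                    .max(Comparator.comparingInt(ProfileCandidate::compiledMethods)
                            .thenComparingLong(ProfileCandidate::uptimeSeconds));
        }
    }

    public static void main(String[] args) {
        Orchestrator rno = new Orchestrator();
        rno.upload(new ProfileCandidate("jvm-a", 120, 800));   // stopped before fully warming up
        rno.upload(new ProfileCandidate("jvm-b", 3600, 2400));
        rno.upload(new ProfileCandidate("jvm-c", 7200, 2400));
        // A newly started JVM asks for a profile.
        rno.best().ifPresentOrElse(
                p -> System.out.println("serving profile from " + p.jvmId()),
                () -> System.out.println("no profile yet; starting cold"));
    }
}
```

Here the new JVM is served the profile from `jvm-c`, which ties with `jvm-b` on compiled methods but ran longer. When no profile has been uploaded yet, the JVM simply starts cold, just as it would without an orchestrator.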

Just as important, ReadyNow profiles have been optimized to work with Cloud Native Compiler, and especially with its server-side cache of previous compilations. When you use ReadyNow profiles with CNC, the JVM not only remembers which compilations it needs but can also pull more of them from the CNC cache instead of compiling them again. The result is a shorter time to peak performance and lower infrastructure costs for serving compilations.

Our full set of cloud-native JVM services is now packaged under the Optimizer Hub umbrella. Optimizer Hub is a component of Azul Platform Prime and is free to use for any Azul Platform Prime customer; there is no additional license or contract to sign. Azul Optimizer Hub runs on a Kubernetes cluster that you provision and maintain in your cloud or on-premises environment.

(1) 451 Research, part of S&P Global Market Intelligence, “Application Modernization: It Takes a (Cloud) Village,” 2022.
