When a vulnerability detection tool collects security information, timing matters. If the tool looks at the filesystem rather than watching the application as it runs, it might see all the components, including ones that are not actually used in production. The result is often an avalanche of false positives that makes detection tools almost unusable. But there is an approach that just might work.

If you’re trying to protect your customers from common vulnerabilities and exposures (CVEs), there is a Goldilocks zone of effective detection. Tools that look in the wrong environments or at the wrong time in software development and deployment can report false negatives, leaving companies running vulnerable code. They can also report false positives, producing so many alerts and notifications that they become more noise than signal.

In this blog post we will look at Java applications and some sources of false positives, the consequences of alerts gone wild, and an approach that we believe might be more effective.

Looking for CVEs in all the wrong places

CVEs can live in the Java runtime and in third-party components, including libraries, frameworks, and infrastructure. Many detection tools are static: they check whether vulnerable code is present, not whether it ever runs. By flagging every vulnerability that exists, whether or not the affected code is running in production, they produce an overload of false positives. The runtime is in a better position to tell the difference. The HotSpot JVM takes its name from its just-in-time (JIT) compiler, which focuses on optimizing the “hot spots” of code that actually run rather than all code.

Another shortcoming is looking only in test environments. A tool that watches only test runs can tell whether the code exercised there is vulnerable or safe. But without knowing whether the same code is running in production, it is making an educated guess rather than reporting an accurate result.
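To make the gap between “present” and “running in production” concrete, here is a minimal sketch. The class name `RuntimeFilter`, the method names, and the JAR names are all hypothetical and chosen only for illustration; a real tool would gather both lists from a filesystem scan and from the production runtime.

```java
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch: splits flagged components into "loaded in production"
// and "present on disk only". All names and data here are illustrative.
class RuntimeFilter {

    // Flagged components that were also observed loaded at runtime.
    static Set<String> inUse(List<String> flaggedOnDisk, Set<String> loadedInProduction) {
        Set<String> result = new LinkedHashSet<>(flaggedOnDisk);
        result.retainAll(loadedInProduction);
        return result;
    }

    // Flagged components that exist on disk but were never loaded:
    // candidates for lower-priority alerts.
    static Set<String> presentButUnused(List<String> flaggedOnDisk, Set<String> loadedInProduction) {
        Set<String> result = new LinkedHashSet<>(flaggedOnDisk);
        result.removeAll(loadedInProduction);
        return result;
    }

    public static void main(String[] args) {
        // Assumed inputs: what a filesystem scan flagged, and what production loaded.
        List<String> flagged = List.of("lib-a-1.0.jar", "lib-b-2.3.jar", "lib-c-0.9.jar");
        Set<String> loaded = Set.of("lib-b-2.3.jar");

        System.out.println("Act on first: " + inUse(flagged, loaded));
        System.out.println("Present but unused: " + presentButUnused(flagged, loaded));
    }
}
```

The point of the split is prioritization, not dismissal: an unused component is still worth removing, but it does not need the same urgency as one that production actually loads.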

What’s the big deal about false positives?

For security teams, false positives can be debilitating. Here are some of the ramifications of false positives:

Alert fatigue reduces the effectiveness of security teams

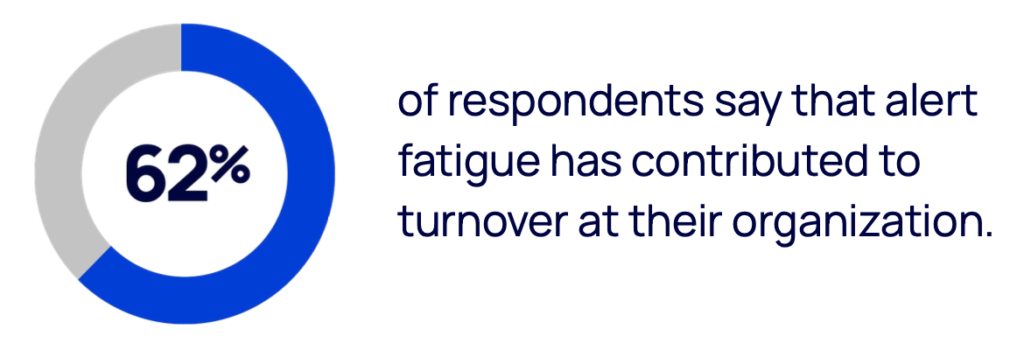

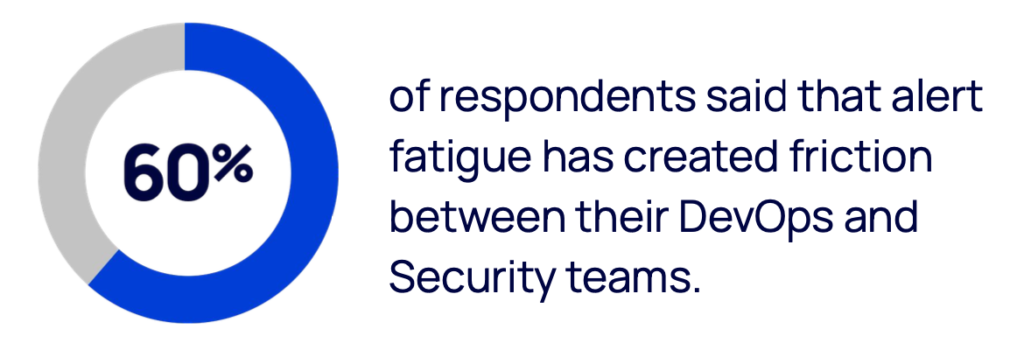

Orca Security’s 2022 Cloud Security Alert Fatigue Report finds that cloud security professionals are joining their counterparts in IT security in being beaten down by an overwhelming number of irrelevant or unnecessary alerts. Orca defines alert fatigue as “when security professionals are exposed to a large number of often meaningless, unprioritized security alerts and consequently become overwhelmed.”

Alert overload is having a material impact on security teams:

- 59% receive more than 500 public cloud security alerts per day

- 55% say critical alerts are being missed, often on a weekly and even daily basis

- 62% say alert fatigue has contributed to turnover

- 60% say alert fatigue has created internal friction in their organization

Vulnerabilities cause damage to internal systems and customers

When Target was breached in 2013, FireEye sent alerts, but employees missed the signals amid the noise of irrelevant notifications. The flurry of alerts often includes not just false alerts but also duplicate notifications and the noise of regular system updates.

Thieves stole 40 million credit and debit records and private data from 70 million customers. In a high-profile incident. From a retail company. Just before Black Friday. Ouch.

Companies suffer reputation damage and lose money

The Target data breach was one of the biggest security breaches in history. Target was required to pay an $18.5 million settlement. That was just the beginning.

The company lost more than $200 million by some estimates. Customers temporarily lost faith in Target as the data breach hit the mainstream media. Earnings reportedly fell 46% following the attack as Target’s reputation took a beating and the CEO was fired.

Current approaches to vulnerability detection

Detection tools use two major approaches to vulnerability detection: Application Security Testing (AST) and Software Composition Analysis (SCA). AST tests the application itself to determine whether it is vulnerable. Its dynamic and interactive forms monitor the application as it executes within the runtime, so they require some actor (such as a user, a QA process, or a tool) to execute the code. SCA identifies the components and libraries used by the software and maps them against a list of known vulnerabilities to determine whether CVEs are present.
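The SCA matching step can be sketched in a few lines. This is a simplified illustration, not how any particular SCA product works: the class `ScaSketch`, the `scan` method, and the `com.example:demo-lib` component are hypothetical, the advisory list holds a single entry, and matching is by exact version only (real tools match version ranges against full vulnerability databases).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of SCA: look up each component in a list of known
// vulnerabilities. The one-entry advisory list is for illustration only.
class ScaSketch {

    // Advisory list keyed by "group:artifact:version".
    static final Map<String, List<String>> KNOWN_VULNERABILITIES = Map.of(
            "org.apache.logging.log4j:log4j-core:2.14.1", List.of("CVE-2021-44228"));

    // Returns one finding per (component, CVE) pair found in the advisory list.
    static List<String> scan(List<String> components) {
        List<String> findings = new ArrayList<>();
        for (String component : components) {
            for (String cve : KNOWN_VULNERABILITIES.getOrDefault(component, List.of())) {
                findings.add(component + " -> " + cve);
            }
        }
        return findings;
    }

    public static void main(String[] args) {
        // Assumed bill of materials for a made-up application.
        List<String> billOfMaterials = List.of(
                "org.apache.logging.log4j:log4j-core:2.14.1",
                "com.example:demo-lib:1.0.0");
        scan(billOfMaterials).forEach(System.out::println);
    }
}
```

Note what the lookup cannot tell you: a match says the component is present, not that the application ever loads or calls it.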

AST can be static, dynamic, or interactive, as follows:

- Static AST (SAST) analyzes an application’s source, bytecode or binary code for security vulnerabilities typically at the programming and/or testing software life cycle (SLC) phases

- Dynamic AST (DAST) analyzes applications in their dynamic, running state during testing or operational phases. DAST simulates attacks against an application (typically web-enabled applications and services), analyzes the application’s reactions and, thus, determines whether it is vulnerable

- Interactive AST (IAST) combines elements of SAST and DAST simultaneously. It is typically implemented as an agent within the test runtime environment (for example, instrumenting the Java Virtual Machine [JVM] or .NET CLR) that observes operation or attacks and identifies vulnerabilities
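For readers who have not seen a JVM agent, here is a minimal sketch of the mechanism using the standard `java.lang.instrument` API. It only records the names of classes as the JVM loads them and does not detect anything by itself. The class name `LoadedClassRecorder` is hypothetical, and packaging (a JAR whose manifest names the class in `Premain-Class`, started with `-javaagent`) is assumed rather than shown.

```java
import java.lang.instrument.ClassFileTransformer;
import java.lang.instrument.Instrumentation;
import java.security.ProtectionDomain;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical sketch of an agent that observes which classes the JVM loads.
// For real use, declare the class public in its own file and package it as an agent JAR.
class LoadedClassRecorder implements ClassFileTransformer {

    // Thread-safe set of class names seen so far.
    static final Set<String> SEEN = ConcurrentHashMap.newKeySet();

    // Entry point the JVM calls before main() when started with -javaagent.
    public static void premain(String agentArgs, Instrumentation inst) {
        inst.addTransformer(new LoadedClassRecorder());
    }

    // Invoked for each class loaded after the transformer is registered.
    @Override
    public byte[] transform(ClassLoader loader, String className,
                            Class<?> classBeingRedefined,
                            ProtectionDomain protectionDomain,
                            byte[] classfileBuffer) {
        if (className != null) {
            // The JVM passes internal names such as "org/example/Foo".
            SEEN.add(className.replace('/', '.'));
        }
        return null; // null tells the JVM the bytecode is unchanged
    }

    public static void main(String[] args) {
        // Without an agent attached, simulate one class-load callback by hand.
        new LoadedClassRecorder().transform(null, "org/example/Foo", null, null, new byte[0]);
        System.out.println(SEEN);
    }
}
```

A recorder like this sees only what the environment it is attached to actually loads, which is why it matters whether that environment is a test system or production.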

Where to next?

Companies that run Java applications already deploy and manage a Java runtime environment (JRE) to run those applications. External security tools often fall into a responsibility gap between the application security team (which owns the tool and budget) and the developer/operations team (which owns the application environment), increasing the challenge of staying safe from threats like Log4j.

Remember Log4j? It’s a ubiquitous, seemingly innocuous logging library that’s used by a huge number of Java applications. When a vulnerability was discovered buried inside Log4j in December 2021, more than 800,000 exploitation attempts were detected in the first 72 hours, according to cybersecurity firm Check Point. A cybersecurity panel created by U.S. President Joe Biden called the Log4j vulnerability an “endemic” problem that will likely pose security risks for more than a decade.

Companies became exposed to it through libraries and frameworks even if they didn’t write code that used it. Fortunately, it doesn’t affect the logging library included in OpenJDK, and Azul customers were safe. Learn more in Simon Ritter’s blog post.