Summary

Many organizations seek to control and reduce their cloud compute costs, and various, often complementary, techniques can be applied to that goal. Running your applications on a better JVM without changing code or re-architecting is a quick, low-effort, low-investment way to reduce compute costs and improve efficiency in Java and JVM-based applications.

In this post, you will learn about the three main ways you can use better JVMs to reduce systemic cloud compute costs in Java and JVM-based applications and infrastructure:

- Speed: A JVM that runs application code faster can reduce the amount of CPU used to get the same amount of work done

- Consistency: A JVM that improves execution consistency at higher utilization levels enables organizations to raise their utilization targets, extracting more work out of each vCPU they pay for

- Elasticity: A JVM that improves warmup by reducing its associated service-level-impacting artifacts allows for increased elasticity without hurting traffic. You can turn off compute resources more aggressively when they are not needed or are underutilized

We’ll look at each of these and show how it can be used to reduce costs.

One of the most obvious ways to reduce compute costs is to improve each application's performance so it can deliver the same amount of work with fewer resources. While re-engineering each application's code to make it more efficient can sometimes lead to significant gains, there are quicker, more systemic ways to improve performance and reduce costs.

The most obvious and most immediate way to improve application performance is to run the same application code on faster or cheaper compute infrastructure.

So where does faster and cheaper compute infrastructure come from?

The vCPUs we get to run on generally get faster over the years. When faster vCPUs become available at the same per-vCPU price (or at an even better speed per dollar spent), moving your cloud compute consumption to those newer vCPUs as a means of cost reduction is obvious. Choosing otherwise is usually a waste.

The same is true when cheaper vCPUs that can deliver the same performance become available. This sometimes occurs with vCPU-architecture or vendor shifts (e.g., x86-64 vs. AArch64, Intel vs. AMD). But it also happens over time within a given architecture and hardware vendor, as more and more vCPUs fit onto silicon chips through the natural progression of Moore’s law.


Java and JVM-based applications can take advantage of such shifts in the underlying vCPUs because, in most cases, the same Java code can run on virtually all cloud compute instance types. And since the underlying JVMs adapt the application code to both run on and optimize for whatever hardware they land on, no recompilation is needed to adapt to changing hardware choices. Customers can choose the instance types that deliver the best “bang for the buck” (work per dollar spent) at a given point in time, and shift between them as those metrics change and evolve over time.

How your choice of JVM affects cloud costs

Perhaps less obvious is the choice of the JVM used to run on those vCPUs. That choice can also deliver faster and cheaper compute infrastructure. A JVM that can execute the same application code faster, on the same vCPU, will [obviously] deliver cost reductions in the same way that faster and cheaper vCPUs do.

The speed gap between JVMs has now grown to be bigger than the speed gaps between consecutive vCPU generations and between vCPU architectures. This makes the choice of JVM as powerful as the choice of CPU when it comes to containing or reducing cloud compute costs.

Note that this is not an either-or choice. The benefits of choosing better vCPU-speed-per-cost instance types and faster JVMs are complementary and additive. In fact, the faster JVMs are usually also better at exposing additional speedups with new vCPUs, as they tend to better optimize for their newer features and capabilities.

In summary, the benefits of running on a faster JVM are just as obvious as those of running on a faster vCPU. Not doing so is a waste.

A common reality in cloud compute

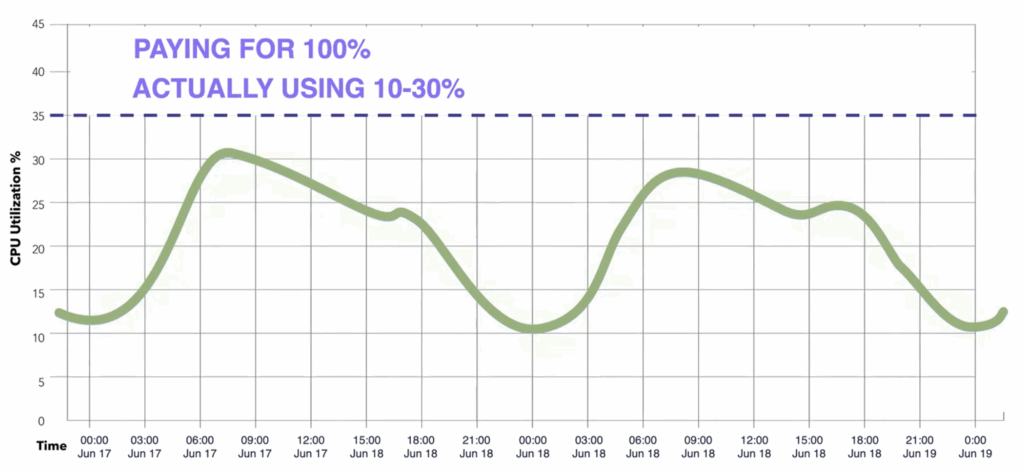

Let’s look at a common example of utilization and resources used in a cloud environment. The chart above, taken from a multi-day pattern of an actual customer production application running at scale, depicts CPU utilization over time [Figure 1]. The patterns in this chart are ones we see repeated across many customers.

This organization is paying for 100% of the CPU provisioned in this application cluster, but using only 10%-30% of the compute capacity they pay for. The remaining 70%-90% of that compute remains unused, delivering no work.

Nobody gets to this level of utilization by accident. People usually arrive here because, when they tried to push harder, they didn't like the resulting service levels. This raises the natural question: What can we do to improve the system from a cost perspective?

How to leverage improved speed, consistency, and elasticity

I'm going to take three things that Azul’s JVMs improve in applications (speed, consistency, and elasticity) and lay them on top of this picture to show their effects.

Step One: Let's start with speed

Suppose it takes “Y” amount of CPU to do a set amount of work over “X” amount of time using a given JVM. Another JVM that can execute the same application logic faster can shrink the “Y” amount of CPU needed to get the same amount of work done over time. If we had the same number of cloud pods carrying the same amount of work, but using the Azul Zing JVM instead of a “vanilla” OpenJDK JVM, each pod would use less CPU because the Azul Zing JVM simply executes the same application code faster [Video].

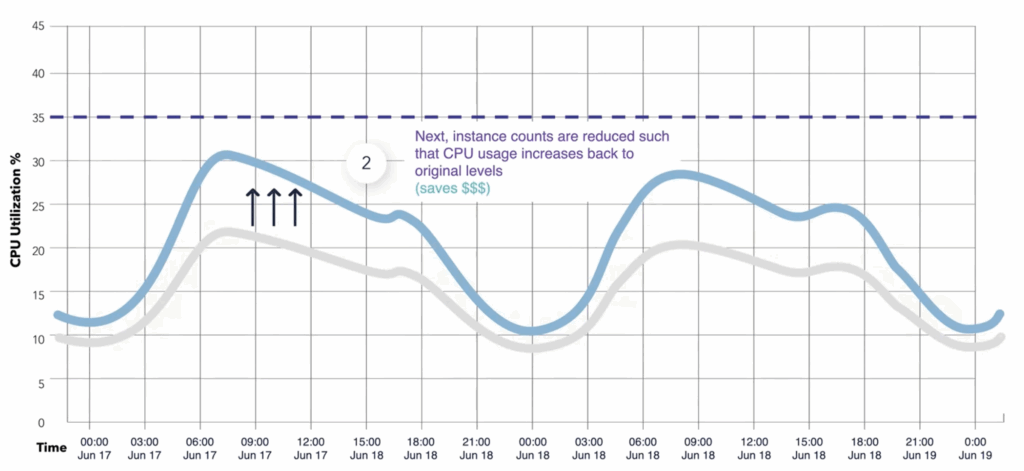

Now we’re doing the same amount of work in the same time but using far fewer CPU cycles due to the Azul Zing JVM’s more efficient code execution. The obvious and immediate thing to do then is to turn off enough instances or Kubernetes pods so that the blue line rises back to the level the green line was at before [Figure 2].

You can do this carefully and incrementally if you want: once you've seen that reduction in CPU utilization, you can start reducing from, e.g., 10 running pods to nine, eight, maybe even seven, until you reach the same CPU use levels you had before, without hurting service levels.
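The step-down can be estimated before touching production. The sketch below assumes CPU use scales linearly with load and that traffic is spread evenly across pods; the class name, method names, and the 30%/21% utilization figures are hypothetical placeholders, not measurements.

```java
/**
 * Sketch: estimate how many pods can be turned off after switching to a faster JVM.
 * Assumes CPU use scales linearly with load and traffic is spread evenly across pods.
 */
public class SpeedSizing {

    /** Projected per-pod CPU % if the same total work is spread over newPods pods. */
    public static double projectedUtilization(int currentPods, double currentUtilPct, int newPods) {
        // Total work, expressed in "percent of one pod", stays constant.
        return currentPods * currentUtilPct / newPods;
    }

    /** Smallest pod count that keeps per-pod CPU % at or below the original level. */
    public static int podsToKeep(int currentPods, double utilBeforePct, double utilAfterPct) {
        double raw = currentPods * utilAfterPct / utilBeforePct;
        // Subtract a tiny epsilon so floating-point noise (e.g. 7.0000000001) doesn't add a pod.
        return (int) Math.ceil(raw - 1e-9);
    }

    public static void main(String[] args) {
        int pods = 10;
        double before = 30.0; // hypothetical peak CPU % on the original JVM
        double after = 21.0;  // hypothetical peak CPU % on the faster JVM, same 10 pods

        int minPods = podsToKeep(pods, before, after);
        // Step down one pod at a time, watching the projected utilization at each step.
        for (int n = pods; n >= minPods; n--) {
            System.out.printf("%d pods -> ~%.1f%% CPU%n", n, projectedUtilization(pods, after, n));
        }
    }
}
```

With these placeholder numbers, the loop steps from 10 pods down to 7, where projected utilization returns to the original 30%. In practice, each step should still be confirmed against real service-level indicators rather than trusted from the projection alone.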

| How to run a fair CPU consumption comparison test |
|---|
| It is sometimes hard to accept that just changing the JDK from OpenJDK to Azul Platform Prime can have such a dramatic effect on the outcome. To prove it out, a 50:50 side-by-side comparison is often useful. For example, if you have a 20-pod cluster and traffic is evenly load balanced across all pods, you can run half of them on “vanilla” OpenJDK and the other half on Prime. That way, you get a reasonable apples-to-apples comparison of the CPU consumption each JVM type demonstrates while carrying equivalent load. However, load balancing in many environments is not “even”. In fact, in many service-mesh-based environments, faster pods end up being assigned more work because they empty their request queues more quickly. This means that a test like the one above may result in unequal load across the two JDK configurations. To account for different pods taking on different amounts of work, you need to record both the CPU levels and the throughput levels experienced by each “half”. With that information, you can compare the “CPU% per work rate” that the two JVM choices actually see under a combined load. |
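The “CPU% per work rate” normalization can be sketched as follows. It assumes both halves are measured over the same time window and that throughput is expressed as requests per second; the `HalfSample` record, its fields, and all sample numbers are hypothetical.

```java
/**
 * Sketch: normalize CPU use by throughput so a 50:50 split stays fair even when
 * the load balancer sends more requests to the faster half.
 * The sample numbers are hypothetical placeholders, not measured results.
 */
public class FairComparison {

    /** Aggregate measurements for one half of the cluster over the same time window. */
    public record HalfSample(String jvm, double avgCpuPct, double requestsPerSec) {
        /** CPU % consumed per 1,000 requests/s of work carried. */
        public double cpuPctPerKrps() {
            return avgCpuPct / (requestsPerSec / 1000.0);
        }
    }

    /** CPU the candidate needs per unit of work, as a fraction of the baseline's. */
    public static double relativeCpuCost(HalfSample baseline, HalfSample candidate) {
        return candidate.cpuPctPerKrps() / baseline.cpuPctPerKrps();
    }

    public static void main(String[] args) {
        // Hypothetical: the faster half was handed more traffic by the service mesh.
        HalfSample openJdk = new HalfSample("OpenJDK", 30.0, 4000.0);
        HalfSample prime = new HalfSample("Prime", 27.0, 6000.0);

        double ratio = relativeCpuCost(openJdk, prime);
        System.out.printf("%s needs %.0f%% of %s's CPU per unit of work%n",
                prime.jvm(), ratio * 100, openJdk.jvm());
    }
}
```

With these placeholder numbers, the raw CPU readings (27% vs. 30%) differ by only 10%, but the normalized cost ratio is 0.6, meaning about 40% less CPU per unit of work. Comparing raw CPU alone would understate the difference whenever the faster half absorbs extra traffic.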

Leveraging speed is just the first (and often the quickest and easiest) step in extracting savings with the Zing JVM. If you look at this chart’s shape again, it looks the same as where we started. It still has multiple inefficiencies that can be addressed.

- Yes, the total number of vCPUs used is already reduced: because code is faster, we’re able to carry the same workload, at the same levels of CPU utilization, while using fewer vCPUs. We made each vCPU we pay for “worth” more, but we didn’t change the shape of the chart…

- But peak CPU % utilization is low: even at peak utilization, we only see a ~30% utilization rate of the provisioned (and paid-for) CPUs. In other words, even at peak utilization, more than two-thirds of the dollars spent are still not being used.

- And CPU % utilization is uneven, averaging well below the peak: CPU utilization varies between ~10% and ~30%. Outside of peak load levels, utilization is even lower and the waste (the amount of paid-for but unused CPU) is even higher.

There's a reason organizations often settle for such results. They've usually learned [the hard way] that if you push peak utilization higher, things won't go well. They may have also learned that if they turn off resources during low-load, low-utilization periods and later turn them back on when load grows, things don’t go well either. Their SLA indicators show bad things, or their users complain, so they don't push or shape their utilization to levels that cause those things to happen.

Step Two: Leverage improved consistency

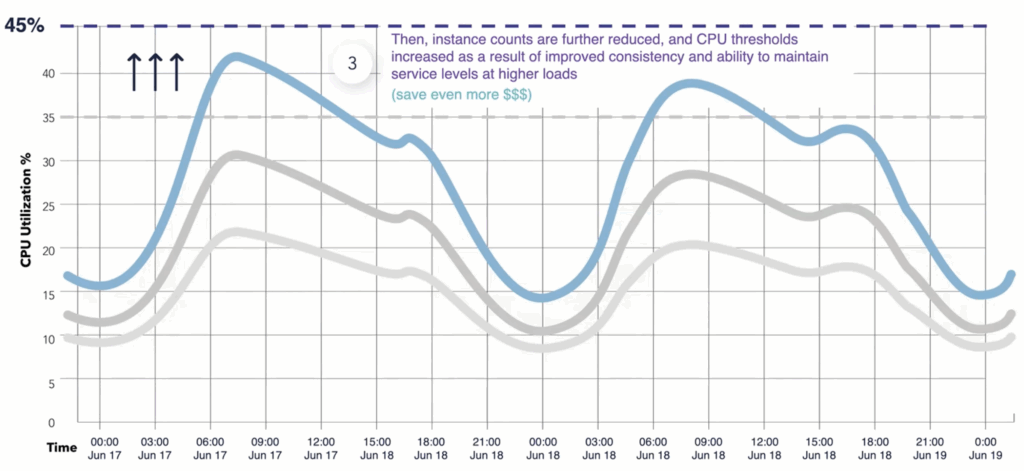

The Zing JVM is specifically engineered to execute application code with more consistent performance (fewer hiccups, pauses, “temporary slowdowns”, or stalls at a given load level) than “vanilla” OpenJDK JVMs do. As a result, each instance and each pod can usually handle a higher CPU utilization before such “bad things” start to happen, that is, before service-level-affecting artifacts become frequent enough to degrade behavior to unacceptable levels.

Leveraging this improved consistency, the next step to reduce costs is to turn off additional pods so that peak CPU utilization rises above its previous target. We can achieve significant savings by raising this target by even a seemingly small increment. For example, reducing pod counts to raise peak CPU utilization from ~30% to ~40% would reduce the overall pod count by ~25%, with each remaining pod carrying 33% more work than it did before.
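The arithmetic behind this example can be written out as follows. It assumes the total work W stays constant and that per-pod CPU utilization u scales linearly with the work each pod carries. Under those assumptions, the pod count needed is N = W/u, with W expressed in units of one pod's full capacity:

$$
\frac{N_{\text{new}}}{N_{\text{old}}} = \frac{W/u_{\text{new}}}{W/u_{\text{old}}} = \frac{u_{\text{old}}}{u_{\text{new}}} = \frac{30\%}{40\%} = 0.75,
\qquad
\frac{W/N_{\text{new}}}{W/N_{\text{old}}} = \frac{N_{\text{old}}}{N_{\text{new}}} = \frac{40\%}{30\%} \approx 1.33
$$

In other words, the cluster needs 25% fewer pods, and each remaining pod carries about 33% more work.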

This is where Azul’s investment in JVM consistency over the years really pays off, because it allows us to push those CPU targets higher than they are today, resulting in large savings [Figure 3].

| How to evaluate how an improved JVM can be pushed to higher utilizations |
|---|
| To see how much you can save by moving target utilization levels higher, experiment by raising the CPU utilization target incrementally and cautiously. This is usually best done in small steps, like 5% at a time. Move up a step, evaluate the resulting service levels after each such “push,” and back off to the previous good level if you push too hard and see service levels degrade below those the “vanilla” JDK sees at its established target utilization level. While you’ll want to evaluate how far you can push with this new and improved JDK setup, you still need to stop short of that line in production. Once the breaking point is established, some “padding” (e.g., 5%-10% below the target level that actually causes degradation) is typically added in the lasting configuration. |
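The step-up procedure can be sketched as a simple search loop. This sketch assumes the starting target is already known to be good and that each step is checked against the baseline JDK's service levels. The `serviceLevelOk` predicate is a hypothetical stand-in for whatever SLO comparison you actually run (p99 latency, error rate, and so on), and the simulated check in `main` is invented for illustration.

```java
import java.util.function.IntPredicate;

/**
 * Sketch of the cautious "raise the target in small steps" procedure.
 * serviceLevelOk is a hypothetical stand-in for a real SLO check against the baseline JDK.
 */
public class TargetSearch {

    /** Returns the production CPU target: the breaking point minus padding. */
    public static int findProductionTarget(int startPct, int stepPct, int maxPct,
                                           int paddingPct, IntPredicate serviceLevelOk) {
        int lastGood = startPct; // assumed: the established baseline target is known to be good
        // Push up one small step at a time until service levels degrade or the cap is reached.
        while (lastGood + stepPct <= maxPct && serviceLevelOk.test(lastGood + stepPct)) {
            lastGood += stepPct;
        }
        // First level that degraded (or the level just beyond the cap if none did).
        int breakingPoint = lastGood + stepPct;
        // Stop short of the breaking point, never above the last verified-good level,
        // and never below where we started.
        return Math.max(startPct, Math.min(lastGood, breakingPoint - paddingPct));
    }

    public static void main(String[] args) {
        // Hypothetical: service levels hold up to a 60% target and degrade above it.
        IntPredicate simulatedCheck = target -> target <= 60;
        int target = findProductionTarget(30, 5, 90, 10, simulatedCheck);
        System.out.println("Production CPU target: " + target + "%");
    }
}
```

With the simulated check, degradation first appears at 65%, so a 10% padding yields a 55% production target, and a 5% padding yields 60%. Keeping the result at or below the last verified-good level is a deliberately conservative choice.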

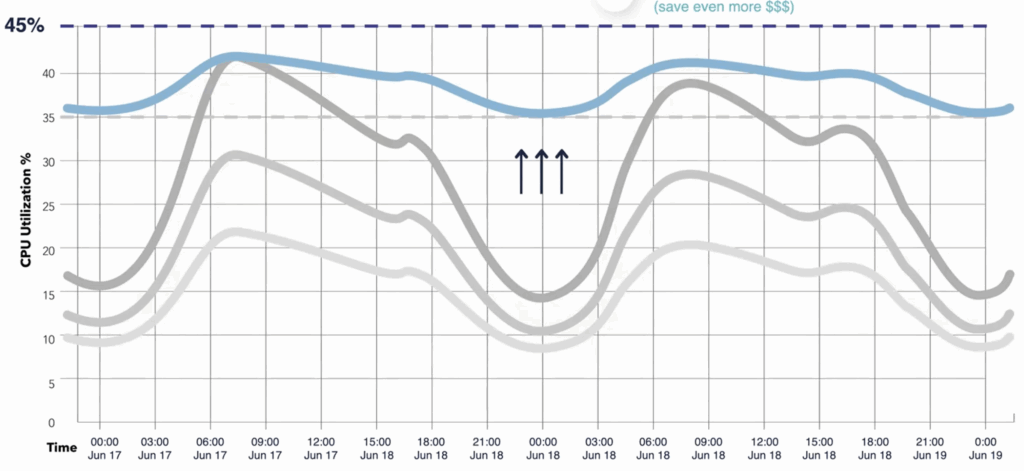

Step Three: Enable and increase elasticity

Even with the first two improvements (speed and consistency) in place, if utilization still varies significantly over time, a substantial inefficiency remains to be addressed. Figure 4 above still has peaks and troughs, with large utilization differences between them. There is a lot of waste at the points in time where resources aren't used at the target peak utilization level, such as at midnight in this chart, where less than half the resources are needed but instances are not turned off even though utilization is very low.

We find that many application utilization charts have this shape. Where we see it, it often indicates that elasticity and autoscaling have not been embraced, or have been embraced only partially. For example, many organizations that use autoscaling as insurance against extreme peaks avoid using it “normally” by keeping a conservative minimum number of pods that they won’t drop below.

Such variability in utilization is usually a result of a lack of willingness to turn off resources when they are not needed. In modern autoscaling-capable deployment environments (e.g., Kubernetes), this lack of willingness is usually attributed not to what happens when pods are turned off (at low utilization, this is typically harmless), but to the harmful behaviors seen when new instances start up again. Such “warmup problems,” where temporarily slow or glitchy behavior occurs early in the life of a new JVM instance, lead to poor service levels for client traffic handled by those instances until they are fully warmed up and “running at speed”. These behaviors usually manifest as increased error rates, timeouts, or response time artifacts. And those glitches are usually not a function of load, but a function of “slow” or “not yet warmed up” instances serving traffic.

This is where the third step in the “better JVM” based cost reduction comes in: With Azul’s Prime JVMs running your applications, elasticity can be fully enabled without incurring the service level degradations so often associated with “warmup” and autoscaling.


“Vanilla” OpenJDK JVMs only warm up effectively by handling actual traffic, as they optimize code only after observing it being exercised enough times (handling enough client traffic) to make optimization choices. Effectively, each newly started pod must “hurt” enough traffic to learn what to optimize and how, and to reach a point where it no longer hurts traffic.

Azul’s Platform Prime product dramatically improves the warmup behavior of individual pods by combining techniques built explicitly for that purpose. With Azul’s ReadyNow and Optimizer Hub technologies, fleets of JVMs can share hard-earned warmup experience, allowing newly started pods to “prime” themselves with prior knowledge and avoid hurting traffic to “learn” what and how to optimize. Azul’s Optimizer Hub uses its Cloud Native Compiler capability to efficiently optimize code across the entire fleet, so that individual JVMs in newly started pods don’t each have to do the heavy lifting of optimization during startup. As a result, powerful optimizations are applied even before traffic arrives on newly started pods, and traffic is no longer exposed to slow, not-yet-warmed-up pods.

The Azul Optimizer Hub automatically orchestrates and amortizes optimizations, allowing the production environment to efficiently and organically self-optimize. These production optimizations appear with no change to the Software Development Lifecycle (SDLC). As new versions of an application get rolled out, early instances (usually canaries) automatically establish the experience and optimizations that subsequent pods then leverage when they start as a result of rollouts and autoscaling.

This fleet-wide optimization capability directly addresses the negative service-level artifacts usually associated with “warmup” and autoscaling. It makes autoscaling both practical and beneficial for most applications and services.

With the hurdles for autoscaling removed, elasticity can be fully enabled and widely embraced. Applications that previously avoided autoscaling can now enable it. Applications that have only autoscaled conservatively (e.g., only autoscaling to address rare peaks by using high minimum pod counts) can shift to continuous autoscaling [Figure 4]. The deep troughs in utilization during low-load times can be eliminated by safely turning pods off to raise utilization during low load, and safely turning them back on (without negative service-level impacts) when load returns.
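To make the cost of a high minimum pod count concrete, here is a simplified sketch of the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: desired = ceil(current × currentMetric / targetMetric), clamped to the configured minimum and maximum, with scaling skipped when the ratio is within the default 0.1 tolerance of 1.0. The sketch ignores stabilization windows and the special handling of not-yet-ready pods. The class name and all pod counts and utilization figures are hypothetical.

```java
/**
 * Sketch of the Kubernetes HPA replica formula:
 * desired = ceil(current * currentMetric / targetMetric), clamped to [min, max].
 * Ignores stabilization windows and not-yet-ready pod handling; numbers are hypothetical.
 */
public class ReplicaMath {

    // HPA's default tolerance: skip scaling when the ratio is within 10% of 1.0.
    static final double TOLERANCE = 0.1;

    public static int desiredReplicas(int current, double currentUtilPct, double targetUtilPct,
                                      int minReplicas, int maxReplicas) {
        double ratio = currentUtilPct / targetUtilPct;
        int desired = Math.abs(ratio - 1.0) <= TOLERANCE
                ? current
                : (int) Math.ceil(current * currentUtilPct / targetUtilPct);
        return Math.min(maxReplicas, Math.max(minReplicas, desired));
    }

    public static void main(String[] args) {
        // Overnight trough: 10 pods at 12% CPU against a 40% target.
        int highFloor = desiredReplicas(10, 12.0, 40.0, 8, 20); // conservative "insurance" minimum
        int lowFloor = desiredReplicas(10, 12.0, 40.0, 2, 20);  // continuous autoscaling
        System.out.println("High minimum: " + highFloor + " pods; low minimum: " + lowFloor + " pods");
    }
}
```

With these placeholder numbers, a floor of 8 keeps 8 pods running through the trough, while a floor of 2 lets the calculation settle at 3 pods, close to the 40% target. Lowering the floor like this is only attractive if newly started pods do not hurt traffic while warming up.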

| Additional cost management benefits that arise from practical elasticity |
|---|
| When elasticity can be fully enabled without incurring “warmup” service-level impacts, new options for using cloud instance consumption and pricing models (such as reduced reservations and wider use of spot instances) arise that may not have been practical before. These can reduce the average cost of a vCPU hour, resulting in further savings. |

Summary

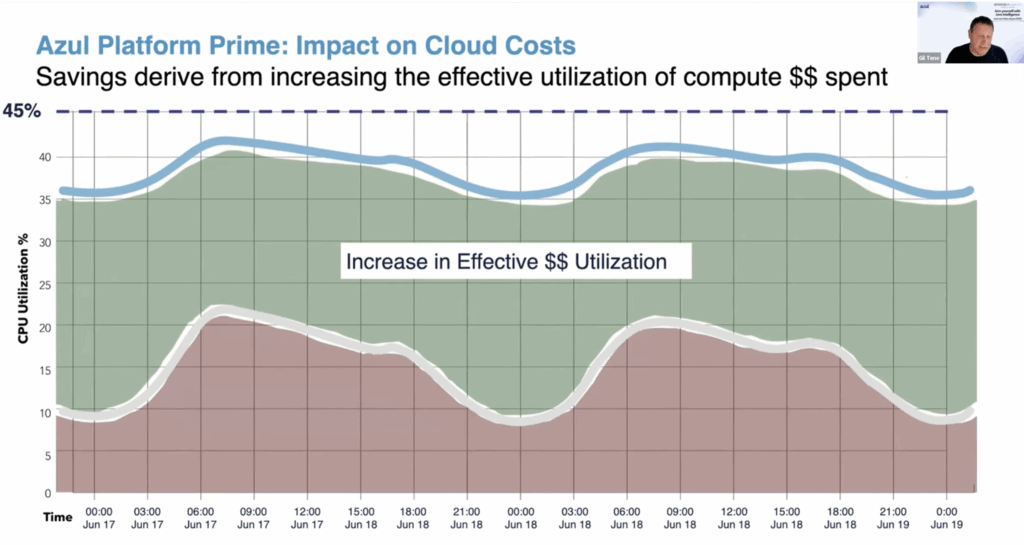

By running applications and services on a JVM that is faster, more consistent, and eliminates warmup-related service level artifacts, compute cost can be significantly reduced. Azul’s Zing JVM and Azul Platform Prime deliver real-world savings by increasing the effective financial utilization of cloud compute spend [see Figure 5].

| “If you want to reduce cost, … focus on cost reduction.” |
|---|
| Usually, when evaluating the potential for cost savings, we want to show cost reduction as quickly as we can with the least amount of effort invested. Through experience, we've learned that there are some pitfalls and distractions along the way, and that some focusing steps can help keep what we think of as a cost-savings “pilot” on track. It is important to remember that while the speed, consistency, and elasticity features Azul Prime offers can be used for cost savings, they can also be used for other purposes. If your goal is cost reduction, it is important to avoid getting distracted or wrapped up in chasing those other benefits just because they are “cool,” or even because they may be otherwise useful. For example, as common-case and outlier latencies often get better in early tests at the same utilization levels, people may start focusing on that benefit and lose sight of the cost-reduction goal. Sometimes we even have to remind them that if what we intend to measure is cost benefit, we should be happy to sacrifice any improved latency or response-time behavior for increased utilization levels. Those speed and consistency benefits often mean “you should be pushing harder and turning off more pods.” We may (and usually do) retain some speed and consistency benefits (e.g., better median or average response times, much lower outlier magnitudes and frequencies) even after savings are realized and after we’ve pushed as hard as we are willing to go. But in the context of a cost-reduction exercise, we view those as a “bonus.” They are not the goal. If what you want is better latencies, fewer outliers, or more “wins” or “matches,” and if cost reduction is not your main purpose, Azul Prime is an excellent solution for those goals as well. But that is a subject for a different blog entry. |

Follow my blog series

In my next blog post, I will focus on the JVM’s impact on application performance. In my third article, I will talk about Azul Optimizer Hub, a feature of Azul Platform Prime. Please follow for more information you can use.