You've heard about Azul Optimizer Hub and are wondering how it fits into your AWS architecture. As an AWS architect, you're likely familiar with the performance challenges of running Java workloads in containerized, cloud-native environments, particularly the startup delays and resource overhead that come with JIT compilation. Optimizer Hub is a Kubernetes-native solution that runs on Amazon EKS and changes this equation, enabling optimal application performance from the first request. Here's what you need to know about integrating it into your AWS environment.

Traditional Java executes code in slower interpreted mode until it can build an optimization profile. This means it can only start optimizing once the application takes traffic and touches the critical code paths. So just when you are scaling out for a large bump in traffic, your machines are running at their slowest and are splitting their available CPU power between handling requests and performing expensive JIT optimizations.
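To make the cost of this warmup pattern concrete, here is a toy Java model of a single hot method. It assumes a fixed invocation threshold before the JIT compiles the method, plus fixed, made-up CPU costs for interpreted calls, compiled calls, and the compilation itself; none of these numbers reflect Zing's real tiering heuristics. When a profile is available up front, the compilation happens during initialization, so request handling never runs interpreted and never competes with the compiler.

```java
/**
 * Toy model of counter-based JIT warmup for one hot method.
 * The threshold and all costs are illustrative assumptions only.
 */
final class WarmupModel {
    static final int COMPILE_THRESHOLD = 1_000; // hypothetical calls before the JIT compiles
    static final long INTERPRETED_COST = 20;    // hypothetical CPU units per interpreted call
    static final long COMPILED_COST = 1;        // hypothetical CPU units per compiled call
    static final long COMPILE_COST = 5_000;     // hypothetical one-off CPU units for compilation

    /**
     * CPU spent while serving {@code requests} calls. When {@code profileAvailable}
     * is true, the method was already compiled during initialization (before traffic),
     * so that compile cost is not counted here.
     */
    static long cpuDuringTraffic(int requests, boolean profileAvailable) {
        boolean compiled = profileAvailable;
        long cpu = 0;
        for (int i = 0; i < requests; i++) {
            if (!compiled && i >= COMPILE_THRESHOLD) {
                cpu += COMPILE_COST; // compilation competes with request handling
                compiled = true;
            }
            cpu += compiled ? COMPILED_COST : INTERPRETED_COST;
        }
        return cpu;
    }
}
```

With these illustrative numbers, serving 10,000 requests costs 34,000 CPU units during traffic without a profile and 10,000 with one.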

Using Optimizer Hub, Java applications can reach full speed more quickly and with minimal client-side CPU load.

| What Is Optimizer Hub? |
|---|
| Optimizer Hub is a scalable set of services external to the JVM that enables better application performance and operational efficiency in deploying and administering Java fleets running on Azul Platform Prime’s Zing JVM. |

Optimizer Hub provides two services that solve the traditional Java warmup problem:

- ReadyNow Orchestrator: Monitors usage patterns across your entire fleet to build optimization profiles that drive compilations on the JVM. Newly started JVMs skip the profiling stage and compile methods as soon as they're initialized, enabling much of your JIT compilation to happen during application initialization before taking traffic.

- Cloud Native Compiler (CNC): Provides server-side optimization by offloading JIT compilation to a separate, dedicated JIT farm. CNC caches optimizations to avoid repeating the same optimization hundreds of times, allowing clients to devote 100% of their CPU to handling requests without reserving capacity for initial JIT compilation spikes.
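The caching idea behind CNC can be sketched in a few lines of Java. This is a simplified model, not CNC's real protocol: it assumes a compiled result can be reused whenever the same method is compiled against the same profile, and the `Key` record, the profile hash, and `expensiveCompile` are hypothetical stand-ins. `ConcurrentHashMap.computeIfAbsent` applies the mapping function at most once per key, so concurrent requests for the same key trigger only one compilation.

```java
import java.nio.charset.StandardCharsets;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

/** Simplified model of a shared compilation cache in a JIT farm. */
final class CompileCache {
    /** Hypothetical cache key: which method, compiled against which profile. */
    record Key(String methodSignature, String profileHash) {}

    private final ConcurrentHashMap<Key, byte[]> cache = new ConcurrentHashMap<>();
    private final AtomicInteger compilations = new AtomicInteger();

    /** Returns cached code, compiling only on the first request for a given key. */
    byte[] compile(String methodSignature, String profileHash) {
        return cache.computeIfAbsent(new Key(methodSignature, profileHash), key -> {
            compilations.incrementAndGet();
            return expensiveCompile(key);
        });
    }

    int compilationsPerformed() {
        return compilations.get();
    }

    // Placeholder for the optimizing compiler; returns fake "machine code".
    private static byte[] expensiveCompile(Key key) {
        return (key.methodSignature() + "@" + key.profileHash()).getBytes(StandardCharsets.UTF_8);
    }
}
```

For example, 200 JVMs requesting the same method with the same profile hash cause a single compilation; a request with a different profile hash causes a second one.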

Used together, these services give you faster time to full speed, smoother CPU utilization during warmup, and less wasted capacity. Unlike other warmup technologies such as GraalVM Native Image, Optimizer Hub works with any JDK version supported by Azul Platform Prime, handles all Java code patterns, and requires no changes to your code.

What’s the architecture?

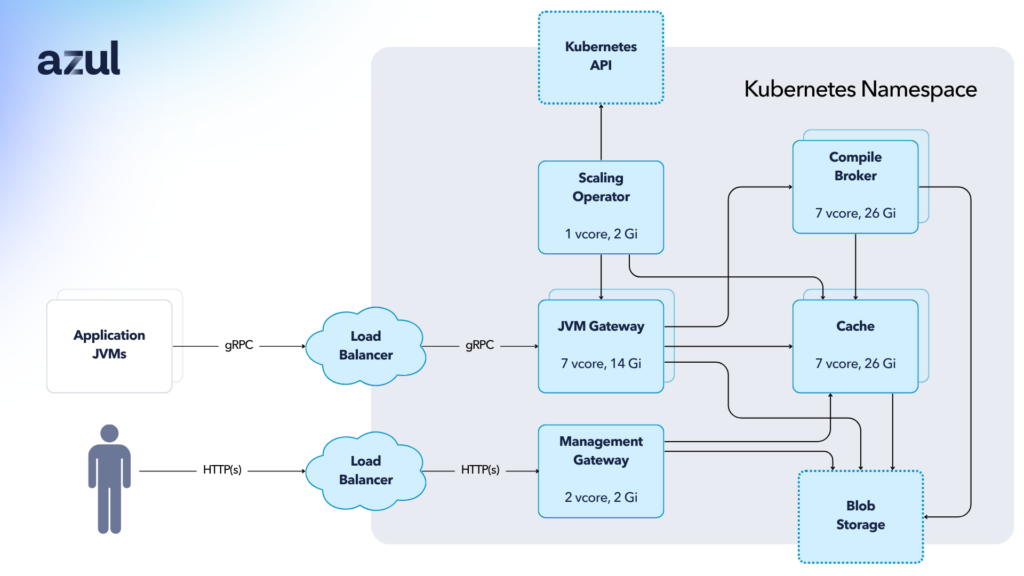

Optimizer Hub ships as a set of services that you run on a Kubernetes cluster in your VPC, usually on Amazon Elastic Kubernetes Service (EKS) [Figure 1].

Important details:

- The recommended way to install is with our Helm charts (Helm v3.8.0 or newer).

- All images are on Docker Hub, but most customers mirror them into an internal artifact management system and then update the Helm charts to point to the new location.

- The Optimizer Hub operator handles all scaling and requires permission to call the Kubernetes API. Optimizer Hub does not use the Kubernetes Horizontal Pod Autoscaler (HPA).

- Optimizer Hub requires access to Amazon S3. You can grant the relevant nodes read/write permission on the S3 buckets, or use IAM roles for Kubernetes service accounts to manage access.

- Optimizer Hub is a shared service: one instance can serve all the JVMs running in an availability zone, so you do not need a separate instance for each application. Ideally, the Optimizer Hub instance should be in the same availability zone as the applications it serves.

- Optimizer Hub supports full high availability, and JVMs continue to function even if they lose access to Optimizer Hub completely.

Installing on EKS

Infrastructure Requirements:

- Instance Types: On-demand or reserved EC2 instances (no spot instances)

- Sizing: Minimum 8 vCPUs and 32 GB RAM per node

- Recommended Instances: m6 or m7 instance families

- Deployment Options: Install in an existing EKS cluster or create a dedicated cluster using the provided eksctl script
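A quick pre-flight check can catch node types that fall below these requirements before you install. The sketch below is hypothetical: the `NodeSpec` record and the `Purchase` enum are illustrative types you would populate from your own inventory (for example, from the EC2 instance-type specifications), and it checks only the minimums listed above.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical pre-flight check against the minimum node requirements. */
final class NodeCheck {
    enum Purchase { ON_DEMAND, RESERVED, SPOT }

    /** Illustrative description of a candidate node type. */
    record NodeSpec(String instanceType, int vcpus, int memoryGiB, Purchase purchase) {}

    static final int MIN_VCPUS = 8;
    static final int MIN_MEMORY_GIB = 32;

    /** Returns a list of problems; an empty list means the node meets the minimums. */
    static List<String> problems(NodeSpec node) {
        List<String> problems = new ArrayList<>();
        if (node.purchase() == Purchase.SPOT) {
            problems.add(node.instanceType() + ": spot instances are not supported");
        }
        if (node.vcpus() < MIN_VCPUS) {
            problems.add(node.instanceType() + ": needs at least " + MIN_VCPUS + " vCPUs");
        }
        if (node.memoryGiB() < MIN_MEMORY_GIB) {
            problems.add(node.instanceType() + ": needs at least " + MIN_MEMORY_GIB + " GiB RAM");
        }
        return problems;
    }
}
```

An on-demand m6i.2xlarge (8 vCPUs, 32 GiB) passes, while an m5.xlarge (4 vCPUs, 16 GiB) on spot capacity fails all three checks.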

The eksctl script creates the following in your AWS account:

- CloudFormation stacks for the main EKS cluster and each of the NodeGroups in the cluster.

- A Virtual Private Cloud called eksctl-{cluster-name}-cluster/VPC. If you choose to use an existing VPC, this is not created. You can explore the VPC and its related networking components in the AWS VPC console. The VPC has all of the required networking components configured:

  - A set of three public subnets and three private subnets

  - An Internet Gateway

  - Route Tables for each of the subnets

  - An Elastic IP Address for the cluster

  - A NAT Gateway

- An EKS Cluster, including four node groups, each with one m5.2xlarge instance provisioned:

  - infra - for running Grafana and Prometheus

  - opthubinfra - for running the Optimizer Hub infrastructure components

  - opthubcache - for running the Optimizer Hub cache

  - opthubserver - for running the Optimizer Hub compile brokers

- IAM artifacts for the Auto Scaling groups:

  - Roles for the Auto Scaling groups for the cluster and for each subnet

  - Policies for the EKS autoscaler

Autoscaling

Optimizer Hub has two modes: ReadyNow-only and full mode (with ReadyNow and CNC). ReadyNow-only mode needs few resources and does not scale. CNC, on the other hand, scales up significant resources during the short periods when JVMs are starting, then scales them down as compilation requests taper off.

Unlike a traditional HPA that scales based on CPU/memory metrics, the Optimizer Hub operator scales gateway, compile-broker, and cache components based on compilation workload. The system monitors compilation queue depth and scales resources to match incoming compilation requests with processing capacity.
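The difference from CPU-based HPA can be illustrated with a queue-depth rule. This is a hypothetical sketch, not the operator's actual algorithm: it assumes each compile-broker replica drains a known number of compilations per second, and it sizes the deployment so the current queue drains within a target window, clamped to minimum and maximum replica counts.

```java
/** Hypothetical queue-depth scaling rule for compile-broker replicas. */
final class CompileBrokerScaler {
    static int desiredReplicas(int queuedRequests, double compilesPerReplicaPerSecond,
                               double drainTargetSeconds, int minReplicas, int maxReplicas) {
        if (compilesPerReplicaPerSecond <= 0 || drainTargetSeconds <= 0) {
            throw new IllegalArgumentException("throughput and drain target must be positive");
        }
        // Replicas needed to drain the current queue within the target window.
        double needed = queuedRequests / (compilesPerReplicaPerSecond * drainTargetSeconds);
        int replicas = (int) Math.ceil(needed);
        // Clamp to the configured bounds.
        return Math.max(minReplicas, Math.min(maxReplicas, replicas));
    }
}
```

During a rollout the queue spikes and the rule asks for more replicas; once the queue empties, it falls back to the minimum, mirroring the scale-up and scale-down pattern described above.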

Load balancing and high availability in Optimizer Hub

In production systems, you must front your Optimizer Hub instances with a load balancer or service mesh. JVMs connecting to Optimizer Hub need a stable, single entry point to communicate with the service. You can use a DNS-based load balancer (e.g., Amazon Route 53) or a Kubernetes service mesh (e.g., Istio). Optimizer Hub gateways include standard readiness checks you can use to determine when to send traffic to the cluster.

| Note |
|---|
| We recommend having at least two instances of Optimizer Hub in production setups. Normally you would have one instance in each availability zone to reduce latency, with each instance serving as a backup to the instances in other availability zones should those instances go down. |

Optimizer Hub instances can be configured to automatically sync ReadyNow profiles with each other, so if the nearest instance for an application is unavailable, the other instances have the profiles needed to ensure a smooth warmup.
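The routing policy implied by this setup (prefer a ready instance in the client's own availability zone, fall back to another zone, and otherwise let the JVM run without Optimizer Hub) can be sketched as follows. In practice this decision is made by your DNS policy or service mesh rather than by application code; the `Instance` record and the `isReady` predicate, which stands in for the gateway readiness check, are hypothetical.

```java
import java.util.List;
import java.util.Optional;
import java.util.function.Predicate;

/** Sketch of zone-aware, readiness-gated routing to Optimizer Hub instances. */
final class HubRouter {
    /** Hypothetical description of one Optimizer Hub instance. */
    record Instance(String name, String availabilityZone) {}

    static Optional<Instance> route(List<Instance> instances, String clientZone,
                                    Predicate<Instance> isReady) {
        // First choice: a ready instance in the client's own zone (lowest latency).
        for (Instance instance : instances) {
            if (instance.availabilityZone().equals(clientZone) && isReady.test(instance)) {
                return Optional.of(instance);
            }
        }
        // Fallback: any ready instance in another zone; synced profiles keep warmup smooth.
        for (Instance instance : instances) {
            if (isReady.test(instance)) {
                return Optional.of(instance);
            }
        }
        // Nothing is ready: the JVM keeps running without Optimizer Hub.
        return Optional.empty();
    }
}
```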

If Optimizer Hub becomes unavailable, newly started JVMs continue operating, but with slower warmup and, in some cases, less optimized code.

- ReadyNow profiles will not be available, so JVMs can’t front-load JIT compilation before taking traffic. This can lead to higher CPU load and longer response times when the application starts handling traffic.

- Cloud Native Compiler will not be available, so all compilation happens locally. To limit the impact of compiling locally, you can configure the JVMs to use a lower optimization level when CNC is unavailable.
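This fallback can be sketched as remote-first compilation with a local fallback. It is a hypothetical model, not Zing's implementation: `RemoteCompiler`, the two `Tier` values, and passing the offline tier as a parameter are illustrative stand-ins for the JVM configuration described above, and no real JVM flags are implied.

```java
import java.util.Optional;

/** Sketch of remote-first compilation that degrades to local compilation. */
final class CompileFallback {
    /** Hypothetical optimization levels. */
    enum Tier { FULL, REDUCED }

    record Result(String method, Tier tier, boolean remote) {}

    /** Hypothetical client for the remote JIT farm. */
    interface RemoteCompiler {
        /** Returns empty when Optimizer Hub cannot be reached. */
        Optional<Result> compile(String method);
    }

    static Result compile(String method, RemoteCompiler remote, Tier localTierWhenOffline) {
        // Try the JIT farm first; if it is unreachable, compile locally at the configured tier.
        return remote.compile(method)
                .orElseGet(() -> new Result(method, localTierWhenOffline, false));
    }
}
```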

Try it yourself

Optimizer Hub is free to use with any licensed instance of Azul Platform Prime. Azul Platform Prime Stream Builds are available for evaluation and testing. Our Java Performance Experts are available to help you get the best customer experience and the lowest possible AWS cloud cost with Azul Platform Prime.