Azul’s whole focus is developing JVMs. Azul Platform Prime is ideally suited to delivering better performance and reduced cost when deploying Java-based microservices.

In this post you will learn:

- How to achieve faster Java warmup for your microservices-based applications

- How a superior JIT compiler can further reduce warmup time

- How a superior garbage collector can eliminate pauses that negatively impact the user experience

Azul Platform Prime is based on the open-source OpenJDK code base, which is the reference implementation of the Java SE standard. To deliver better performance than traditional JVMs, Azul replaces parts of the core JVM with alternative implementations: specifically, the memory management system and part of the Just-in-Time (JIT) compilation system. Platform Prime improves the performance of Java-based microservices in three ways:

- Faster warmup with ReadyNow

- Reduced warmup time with the Cloud Native Compiler

- Fewer pauses with the C4 Garbage Collector

Faster JIT compilation improves performance

Java source code is compiled to bytecodes. Bytecodes are instructions for the Java Virtual Machine (JVM). When the JVM starts an application, it must interpret each bytecode, converting it to the necessary native instructions and operating system calls. This interpretation incurs overhead, which causes the code to run at a much slower rate than statically compiled code.
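To make the interpretation overhead concrete, here is a minimal sketch of a stack-machine interpreter. The opcodes (`PUSH`, `ADD`, `MUL`) and class names are hypothetical and far simpler than real JVM bytecodes such as `iconst` or `iadd`; the point is only that every instruction pays a fetch, decode, and dispatch cost each time it executes, which compiled native code avoids.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Toy interpreter loosely modeled on a JVM-style operand stack.
// The instruction set is hypothetical and greatly simplified.
final class ToyInterpreter {
    enum Op { PUSH, ADD, MUL }

    record Insn(Op op, int operand) {
        static Insn push(int v) { return new Insn(Op.PUSH, v); }
        static Insn of(Op op) { return new Insn(op, 0); }
    }

    // Each instruction is fetched, decoded, and dispatched on every execution;
    // this per-instruction overhead is what JIT compilation removes.
    static int run(Insn[] program) {
        Deque<Integer> stack = new ArrayDeque<>();
        for (Insn insn : program) {      // fetch
            switch (insn.op()) {         // decode and dispatch
                case PUSH -> stack.push(insn.operand());
                case ADD -> stack.push(stack.pop() + stack.pop());
                case MUL -> stack.push(stack.pop() * stack.pop());
            }
        }
        return stack.pop();
    }

    public static void main(String[] args) {
        // Computes (2 + 3) * 4
        Insn[] program = {
            Insn.push(2), Insn.push(3), Insn.of(Op.ADD),
            Insn.push(4), Insn.of(Op.MUL)
        };
        System.out.println(run(program)); // prints 20
    }
}
```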

To address this, the JVM profiles the code as it interprets the bytecodes, keeping a count of how many times each method is called. The JVM uses this profiling data to identify hot spots in the code, which can then be compiled to native instructions by a JIT compiler. Over time, most of the code executed by the application will be compiled; the time it takes to reach this point is called the warmup time of the application.
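The counting scheme described above can be sketched as a small simulation. This is an illustration under stated assumptions, not how HotSpot is implemented: the class name and the threshold value are hypothetical, and real JVMs also count loop back-edges and use separate thresholds for each compilation tier.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Simulates invocation counting: a method becomes a JIT candidate ("hot")
// once its call count reaches a threshold. Threshold and names are hypothetical.
final class HotSpotDetector {
    private final int threshold;
    private final Map<String, Integer> invocationCounts = new HashMap<>();
    private final Set<String> compiled = new HashSet<>();

    HotSpotDetector(int threshold) { this.threshold = threshold; }

    /** Records one call; returns true if this call triggered compilation. */
    boolean onInvoke(String method) {
        if (compiled.contains(method)) {
            return false; // already running native code, no further counting
        }
        int count = invocationCounts.merge(method, 1, Integer::sum);
        if (count >= threshold) {
            compiled.add(method); // hand off to the JIT compiler (simulated)
            return true;
        }
        return false;
    }

    boolean isCompiled(String method) { return compiled.contains(method); }

    public static void main(String[] args) {
        HotSpotDetector jvm = new HotSpotDetector(1_000);
        for (int i = 0; i < 5_000; i++) {
            jvm.onInvoke("OrderService.price");                    // hot path
            if (i % 100 == 0) jvm.onInvoke("OrderService.audit");  // cold path
        }
        System.out.println(jvm.isCompiled("OrderService.price")); // true
        System.out.println(jvm.isCompiled("OrderService.audit")); // false
    }
}
```

Every call made before a method crosses the threshold runs in the slower interpreted mode, which is exactly the warmup cost the rest of this post addresses.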

About JIT Compilers

A traditional JVM employs two JIT compilers, called C1 and C2 (sometimes referred to as the client and server compilers). C1 compiles quickly but applies fewer optimizations; C2 takes longer to compile but produces more highly optimized code. These characteristics govern how quickly an application reaches peak performance.

Since JDK 7, both JITs can be used for the same application through tiered compilation, which became the default in JDK 8. C1 is used initially; C2 is used as the application runs longer to provide increased performance.
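A running application can ask the JVM which JIT configuration it is using through the standard `java.lang.management` API. The sketch below is illustrative: on a standard OpenJDK HotSpot build with tiered compilation the reported name is typically along the lines of "HotSpot 64-Bit Tiered Compilers", but the exact string depends on the JVM vendor and version, and the class name `JitInfo` is hypothetical.

```java
import java.lang.management.CompilationMXBean;
import java.lang.management.ManagementFactory;

// Reports the JIT compiler(s) in use and the time spent compiling so far.
final class JitInfo {
    static String describe() {
        CompilationMXBean jit = ManagementFactory.getCompilationMXBean();
        if (jit == null) {
            // getCompilationMXBean() returns null when the JVM has no compiler,
            // for example when running in interpreted-only mode (-Xint).
            return "No JIT compiler available";
        }
        String time = jit.isCompilationTimeMonitoringSupported()
                ? jit.getTotalCompilationTime() + " ms"
                : "n/a";
        return jit.getName() + ", total compilation time: " + time;
    }

    public static void main(String[] args) {
        System.out.println(describe());
    }
}
```

To watch individual methods being compiled as an application warms up, the standard HotSpot option `-XX:+PrintCompilation` logs each compilation event.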

Azul Platform Prime replaces the C2 JIT with a new, improved compiler called Falcon [Figure 1]. The Falcon JIT compiler is based on the open-source LLVM compiler project, which is supported by numerous corporations and individuals, including Intel, NVIDIA, Apple, and Sony.

1. Reduce warmup time with ReadyNow

The issue of application warmup is exacerbated by the fact that each time an application starts, the JVM has no knowledge of what might have happened during previous executions. The JVM must go through the same profiling phase, performing the same analysis and compiling the same sections of code. For applications that need to be restarted frequently, this is problematic.

An obvious way of solving this problem would be to take a snapshot of the compiled code once the application has reached a steady state and all necessary code has been compiled. When the application is restarted, this snapshot could be reloaded, and the application could continue as if it had never stopped (from a compilation point of view). Unfortunately, although the idea sounds simple, the implementation would be complex. The JVM specification places restrictions on what can happen when the JVM starts, specifically in class loading and initialization, and several other issues make this approach impractical.

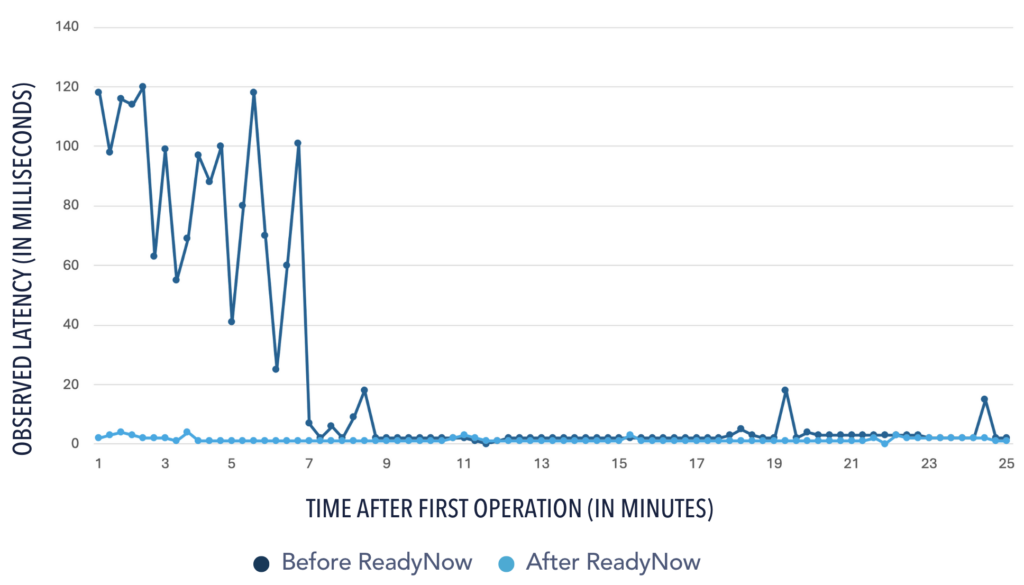

ReadyNow, a feature of Azul Platform Prime, accelerates application warmup in production environments so that applications run fast from the start. The application is first run until it has warmed up; at that point, a profile of the application’s JIT state is recorded. This profile records details of the classes that are currently loaded, the classes that have been initialized, instruction profiling data (such as the data the JVM uses to decide which sections of code to compile), and speculative optimization failures. A copy of the compiled code is also saved.

When the application starts again, the JVM and JIT use the profile to perform all the work they would normally do during the application’s warmup phase, and all of this can happen before the application starts executing code in its main() method. This all but eliminates the warmup phase, so the application can run at very nearly full speed as soon as it starts [Figure 1].
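The "record, then replay" idea can be illustrated with a deliberately tiny sketch that covers only one item from the profile described above: the list of initialized classes. This is a hypothetical example, not ReadyNow’s file format or API. A real ReadyNow profile also carries profiling data, speculative optimization failures, and compiled code, and the replay happens inside the JVM rather than in application code. `WarmupProfile`, `save`, and `replay` are invented names.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: a warmed-up run saves the names of classes it initialized;
// the next run loads and initializes them before the main application logic.
final class WarmupProfile {

    static void save(Path file, List<Class<?>> warmClasses) throws IOException {
        List<String> names = new ArrayList<>();
        for (Class<?> c : warmClasses) names.add(c.getName());
        Files.write(file, names); // one class name per line
    }

    /** Loads and initializes every recorded class; returns how many succeeded. */
    static int replay(Path file) throws IOException {
        ClassLoader loader = WarmupProfile.class.getClassLoader();
        int loaded = 0;
        for (String name : Files.readAllLines(file)) {
            try {
                Class.forName(name, true, loader); // true = run static initializers
                loaded++;
            } catch (ClassNotFoundException e) {
                // The code changed since the profile was taken; skip this entry.
            }
        }
        return loaded;
    }

    public static void main(String[] args) throws IOException {
        Path profile = Files.createTempFile("warmup", ".profile");
        save(profile, List.of(java.util.concurrent.ConcurrentHashMap.class,
                              java.time.LocalDate.class));
        System.out.println(replay(profile)); // prints 2
    }
}
```

Skipping entries that no longer resolve reflects one of the practical problems mentioned earlier: a saved profile must tolerate differences between the run that produced it and the run that consumes it.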

2. Further reduce warmup time with the Cloud Native Compiler

JIT compilation delivers the performance necessary for modern enterprise applications, often outperforming natively compiled code, but that performance comes at a cost. The JIT compiler must share CPU and memory with the application threads that process transactions, which both increases warmup time and degrades application performance during warmup.

If we are running in the cloud, with its elastic resources and utility-based pricing model, why not shift the work of the JIT compiler elsewhere?

This is what Azul has done with the Cloud Native Compiler.

The Cloud Native Compiler creates a centralized JIT-as-a-service [Figure 2]. When a JVM needs to compile a method, rather than compiling it locally, it sends the details to the Cloud Native Compiler. Offloading this work enables greater application throughput during warmup. The Cloud Native Compiler can also be a long-running process that stores the results of method compilations. When multiple instances of a microservice are spun up and request the same method, the stored code can be returned instantly instead of being compiled again, further reducing warmup time.
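The caching behavior described above can be sketched with a concurrent map. This is a hypothetical illustration, not the Cloud Native Compiler’s protocol or storage format: the key format (method signature plus a profile identifier) and the fake "compiled code" payload are assumptions chosen because optimized code generally depends on the profile it was specialized for.

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical shared compilation cache: the expensive compile runs once per
// unique key, and later requests from other JVM instances reuse the result.
final class CompilationCache {
    private final Map<String, byte[]> cache = new ConcurrentHashMap<>();
    private final AtomicInteger compilations = new AtomicInteger();

    byte[] compile(String methodSignature, String profileId) {
        String key = methodSignature + "@" + profileId;
        // ConcurrentHashMap.computeIfAbsent runs the function at most once per key,
        // even when several instances ask for the same method concurrently.
        return cache.computeIfAbsent(key, this::expensiveCompile);
    }

    private byte[] expensiveCompile(String key) {
        compilations.incrementAndGet();
        // Stand-in for real code generation.
        return ("native code for " + key).getBytes(StandardCharsets.UTF_8);
    }

    int compilationCount() { return compilations.get(); }

    public static void main(String[] args) {
        CompilationCache service = new CompilationCache();
        // Three instances of the same microservice warm up the same hot method.
        for (int instance = 0; instance < 3; instance++) {
            service.compile("OrderService.price(I)J", "profile-1");
        }
        System.out.println(service.compilationCount()); // prints 1
    }
}
```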

3. Fewer pauses with the C4 Garbage Collector

When a Java application is executed, the JVM handles memory management automatically. The JVM allocates space on the heap when a new object is instantiated and reclaims that space when the application no longer holds any references to it. This is the process of garbage collection (GC). The GC also keeps space available for new objects by periodically compacting the heap: it moves objects so that live data is contiguous, eliminating fragmentation. Automatic GC provides significant advantages over languages such as C and C++, which rely on the programmer to explicitly deallocate memory used by the application. Explicit deallocation is the source of many application bugs, particularly memory leaks and abrupt application termination when the programmer tries to deallocate an invalid or incorrect address.
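Compaction itself can be pictured with a toy model. In this sketch (an illustration, not any real collector’s algorithm), the heap is an array of slots, marking is assumed to have already happened so that `null` stands for a dead or free slot, and live objects are slid toward the start of the array. A real collector must also update every reference to each moved object, which is why a traditional JVM pauses application threads during this phase. `ToyCompactor` is a hypothetical name.

```java
import java.util.Arrays;

// Toy sliding compaction: live objects move toward index 0 so that free
// space becomes one contiguous block at the end of the "heap".
final class ToyCompactor {

    /** Compacts in place; returns the index of the first free slot. */
    static int compact(String[] heap) {
        int free = 0; // next destination for a live object (its forwarding address)
        for (int scan = 0; scan < heap.length; scan++) {
            if (heap[scan] != null) {
                heap[free] = heap[scan];          // "move" the object
                if (free != scan) heap[scan] = null;
                free++;
            }
        }
        return free;
    }

    public static void main(String[] args) {
        String[] heap = {"A", null, "B", null, null, "C", null, "D"};
        int top = compact(heap);
        System.out.println(Arrays.toString(heap)); // [A, B, C, D, null, null, null, null]
        System.out.println(top);                   // 4
    }
}
```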

However, in a traditional JVM, GC also requires application threads to pause to avoid corrupting data as objects are moved during compaction. This has two effects. First, it introduces pauses into the application while the GC performs its work. These pauses can range from milliseconds to seconds, or even minutes on very large heaps, and their length is proportional to the amount of memory allocated to the heap, not to how much data is in the heap.

Second, when these pauses occur cannot be accurately predicted, which introduces nondeterministic behavior into the application. In the context of a microservice, clients could incorrectly assume that the service is no longer available even though its JVM is simply performing GC. This may cause new service instances to be started unnecessarily, further impacting performance.

Azul Platform Prime uses a different GC algorithm, the Continuous Concurrent Compacting Collector (C4). Unlike with other commercial GC algorithms, application threads can continue to operate while C4 performs its work, including compaction (hence the concurrent part of the name).

Although other algorithms such as Concurrent Mark Sweep (CMS) and Garbage First (G1) perform part of their work while application threads are running, other parts still require application threads to be paused. Both algorithms fall back to a full compacting collection cycle if they are unable to meet the memory needs of the application, and this full compaction requires application pauses proportional to the heap size.
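One way to see pauses from the application’s point of view is a "hiccup" meter, in the spirit of Azul’s open-source jHiccup tool (this sketch is not its implementation). A thread repeatedly sleeps for a fixed interval and records how much longer than requested each sleep took; a stop-the-world pause shows up as a large overshoot at a moment the application could not predict. The sample count, interval, and allocation workload are arbitrary illustrative choices, and `HiccupMeter` is a hypothetical name.

```java
import java.util.concurrent.TimeUnit;

// Measures the worst delay between when a thread asked to wake up and when it
// actually ran. GC pauses (and other stalls) appear as large overshoots.
final class HiccupMeter {
    static volatile Object sink; // keeps allocations reachable so they are not optimized away

    /** Returns the worst observed overshoot, in microseconds. */
    static long maxHiccupMicros(int samples, long intervalMicros) throws InterruptedException {
        long expected = TimeUnit.MICROSECONDS.toNanos(intervalMicros);
        long worstNanos = 0;
        for (int i = 0; i < samples; i++) {
            long start = System.nanoTime();
            TimeUnit.MICROSECONDS.sleep(intervalMicros);
            long overshoot = System.nanoTime() - start - expected;
            worstNanos = Math.max(worstNanos, overshoot);
        }
        return TimeUnit.NANOSECONDS.toMicros(worstNanos);
    }

    public static void main(String[] args) throws InterruptedException {
        // Generate garbage on another thread so the collector has work to do.
        Thread allocator = new Thread(() -> {
            while (!Thread.currentThread().isInterrupted()) {
                byte[][] junk = new byte[1_000][];
                for (int i = 0; i < junk.length; i++) junk[i] = new byte[1_024];
                sink = junk;
            }
        });
        allocator.setDaemon(true);
        allocator.start();
        System.out.println("Worst hiccup: " + maxHiccupMicros(2_000, 1_000) + " us");
        allocator.interrupt();
    }
}
```

Comparing the worst hiccup under different collectors and heap sizes is a simple way to observe the difference between stop-the-world compaction and a concurrent collector.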

Unlike traditional collectors, which require numerous command-line options that are difficult to configure correctly, C4 needs only the heap size to be set. C4 scales from 1 GB to 20 TB of heap space without increasing application pause times.
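Because the heap size is the one setting C4 needs, it can be useful for a service to log the heap limit and collectors it actually received at startup. The heap size is set with the standard `-Xmx` option (for example, `java -Xmx16g -jar service.jar`, where `service.jar` is a hypothetical artifact). The sketch below uses only standard Java APIs; the collector names it prints vary by JVM vendor and collector, so no particular output is assumed. `HeapReport` is a hypothetical name.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Reports the maximum heap size and the garbage collectors the JVM exposes.
final class HeapReport {

    static String maxHeap() {
        long bytes = Runtime.getRuntime().maxMemory();
        if (bytes == Long.MAX_VALUE) return "unlimited"; // JVM reports no inherent limit
        return String.format(Locale.ROOT, "%.1f GB", bytes / (1024.0 * 1024 * 1024));
    }

    static List<String> collectorNames() {
        List<String> names = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            names.add(gc.getName());
        }
        return names;
    }

    public static void main(String[] args) {
        System.out.println("Max heap: " + maxHeap());
        System.out.println("Collectors: " + collectorNames());
    }
}
```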

Summary

Software development is moving to the cloud, using microservices to build applications in a flexible, scalable way. Java, as one of the most popular programming languages on the planet, is an obvious choice for developing microservices, and Java microservices provide several advantages over those developed in other languages. Azul Platform Prime includes a modern JVM that uses different internal algorithms for garbage collection and part of the JIT compilation system. Combined with ReadyNow technology, which effectively eliminates the warmup phase of microservices, this makes Azul Platform Prime an ideal choice for modern Java microservice development.